Serverless APIs Full Guide - Part 2 (TDD & DynamoDB)

Let's learn how to build an API with Test Driven Development (TDD) and DynamoDB as the database in Node.js

Introduction

I currently work as a Lead Solutions Architect, and one problem we kept facing when developing new software is that we're pretty fast through the first 80% of a project, but towards the end the development team slows down heavily because we start to see a significant number of bugs. They're usually pretty simple, but they keep us busy fixing bugs instead of delivering the complete functionality of the system. The solution I came up with is to encourage the development team to adopt a TDD (Test Driven Development) culture: instead of jumping straight into coding, we first focus on how we're going to validate every business requirement and write its automated test cases, and then, and only then, we start coding. At first this may sound like extra work, but if we think it through, we're spending more time up front to greatly reduce those bugs and deliver the project faster.

This is the 2nd part of the Serverless API Series and, as I mentioned above, we're going to cover TDD. I prepared a basic TODO API project, and we're going to review the data model, the test cases and some hands-on coding so you can apply this practice on your next project.

TDD

So let's review the brief example I prepared for this part of the series. It's a simple TODO API for task assignments; the goal is that you can extrapolate this simple example to a real-world project with hundreds or even thousands of test cases.

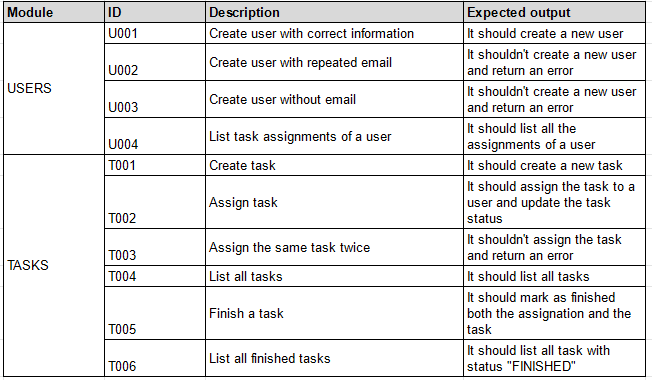

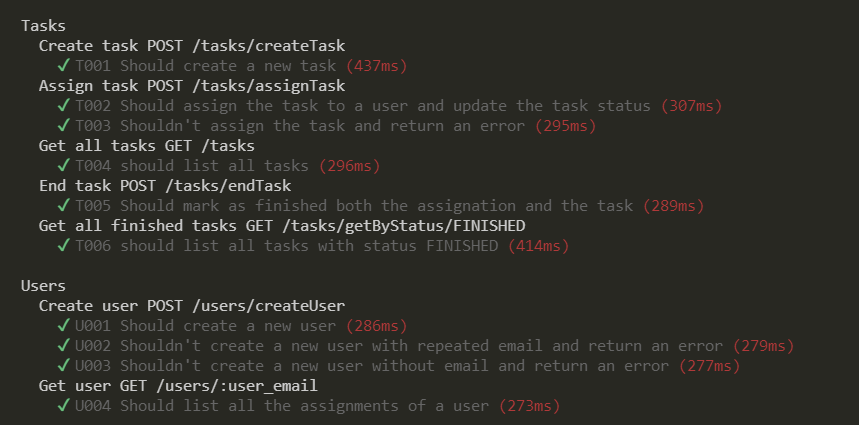

So let's review the test cases:

As we can see in the image above, we have 11 test cases which cover the creation of a user, the creation of a task and the assignment of a task, plus a couple of queries. Now that we have an idea of the functionality the API should have, we're going to design the database. Since this is the Serverless API Series, I chose a serverless database as well, so let's start with a brief introduction to DynamoDB.

DynamoDB Introduction

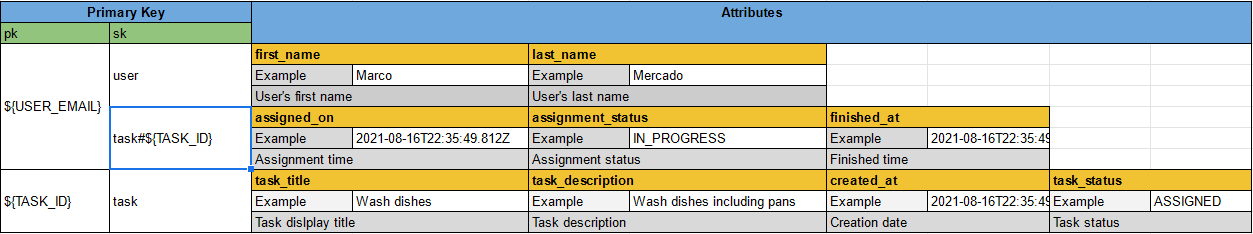

DynamoDB is AWS's managed NoSQL database service. It's a fast, flexible, scalable and, obviously, serverless database that can handle 10 trillion requests per day and support peaks of more than 20 million requests per second, according to the AWS docs. Something I really like about DynamoDB is that it's a lot more flexible than other NoSQL options, because you can either use it as a simple key-value document store or expand it into a Single Table Design thanks to its composite primary key. Let me explain this a little more: in DynamoDB the primary key can be composed of 2 attributes, the Partition Key (pk) and the Sort Key (sk), so we have a lot more room to play because we can literally partition the database into the data schemas we need, like we're going to do in this example:

As we can see in the image above, for this example we're going to have 2 kinds of partition key (user_email, task_id) with 3 different schemas (user, user_task, task), so every task and every email will be a different partition. In practice, we're going to see something like this:

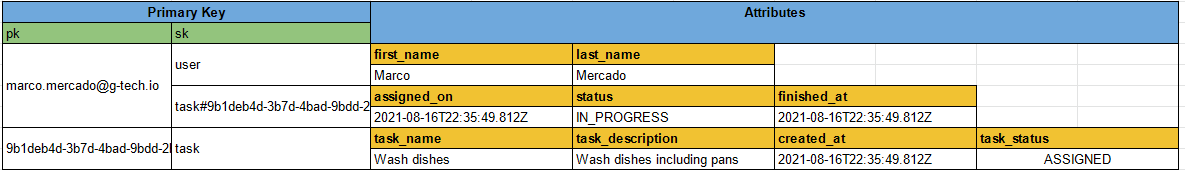

In the image above we can see a user partition (marco.mercado@g-tech.io) that holds the user attributes under the sort key "user" and also holds a task assignment with its assignment attributes. In the same way, we have a task_id partition with the sort key "task" and its respective task attributes.
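
To make the layout a bit more concrete, here is a minimal sketch of how the three item types could be keyed in a single table. The helper names (userKey, assignmentKey, taskKey, groupUserPartition) and the sample values are hypothetical and only illustrate the idea; the `task#<task_id>` sort key format is the one the controllers use later in this post.

```javascript
// Hypothetical helpers illustrating the composite primary key (pk + sk).
// Every user email and every task id becomes its own partition.
const userKey = (email) => ({ pk: email, sk: 'user' })
const assignmentKey = (email, taskId) => ({ pk: email, sk: `task#${taskId}` })
const taskKey = (taskId) => ({ pk: taskId, sk: 'task' })

// Splits the items of one user partition into the user record and its
// assignments, relying only on the sort key to tell the item types apart.
function groupUserPartition(items) {
  const result = { user: {}, assignments: [] }
  for (const item of items) {
    if (item.sk === 'user') result.user = item
    else if (item.sk.startsWith('task#')) result.assignments.push(item)
  }
  return result
}

// Example partition for one user (sample values, not real data)
const partition = [
  { ...userKey('marco.mercado@mail.com'), first_name: 'Marco' },
  { ...assignmentKey('marco.mercado@mail.com', 'abc-123'), assignment_status: 'IN_PROGRESS' }
]

const grouped = groupUserPartition(partition)
console.log(grouped.user.first_name)    // Marco
console.log(grouped.assignments.length) // 1
console.log(taskKey('abc-123'))
```

Because both the user record and its assignments share the same partition key, a single query on the email is enough to fetch them together.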

Hands On!

A little bit of refactor

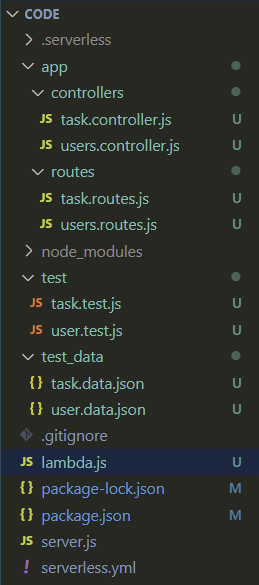

Now that we understand what we're going to do, it's time to start coding (if you don't have the code, you can take a step back and follow Part 1 of the series or download it from my GitHub). First of all, we're going to create a couple of new files:

Then the dependencies needed:

npm i aws-sdk chai chai-http mocha uuid

Now for a little refactoring of our project. In the first part, the server.js file exported a serverless version of our app, but to use the chai library to test our app before deployment we need to change a couple of things. First, change module.exports.handler = serverless(app) to module.exports = app. Then, in the lambda.js file that we just created in the last step, write the following code to import our app and package it for a serverless deployment:

const serverless = require('serverless-http')
const app = require('./server')

module.exports.handler = serverless(app)

Finally, to keep our deployment working properly, change the handler in the serverless.yml file from handler: server.handler to handler: lambda.handler, and we're good to start.

Test Data

We're going to start by building our test data in JSON files, keyed by test case identifier. First for the users:

{
  "U001": {
    "data": {
      "email": "marco.mercado@mail.com",
      "first_name": "Marco",
      "last_name": "Mercado",
      "test_data": true
    }
  },
  "U002": {
    "data": {
      "email": "marco.mercado@mail.com",
      "first_name": "Marco",
      "last_name": "Mercado",
      "test_data": true
    }
  },
  "U003": {
    "data": {
      "first_name": "Marco",
      "last_name": "Mercado",
      "test_data": true
    }
  },
  "U004": {
    "data": {
      "user": "marco.mercado@mail.com"
    }
  }
}

And then for the tasks:

{
  "T001": {
    "data": {
      "task_title": "Clean the kitchen",
      "task_description": "The assignment is to clean the kitchen this includes wash the dishes, clean the stove, sweep and mop the floor",
      "test_data": true
    }
  },
  "T002": {
    "data": {
      "user": "marco.mercado@mail.com",
      "task_id": "STORED",
      "test_data": true
    }
  },
  "T003": {
    "data": {
      "user": "marco.mercado@mail.com",
      "task_id": "STORED",
      "test_data": true
    }
  },
  "T004": {},
  "T005": {
    "data": {
      "task_id": "STORED",
      "user_email": "marco.mercado@mail.com",
      "test_data": true
    }
  },
  "T006": {
    "data": {
      "status": "FINISHED"
    }
  }
}

In this case we don't know which IDs the created tasks will get, so we need to store those IDs at run time; in the data, the value "STORED" indicates that the task_id should be taken from a store variable. We also mark the data in both files with "test_data" so we can clean it up after testing.
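
The "STORED" placeholder idea can be sketched as a small helper. This is only an illustration under my own assumptions: resolveStored is a hypothetical function (the tests in this post resolve the placeholder inline instead), and the task id below is made up.

```javascript
// Hypothetical helper: returns a copy of a test case's data where every
// field set to the "STORED" placeholder is replaced by the value saved
// from an earlier test. The original fixture object is left untouched.
function resolveStored(data, stored = {}) {
  const resolved = { ...data }
  for (const [field, value] of Object.entries(resolved)) {
    if (value === 'STORED') {
      if (!(field in stored)) {
        throw new Error(`No stored value available for "${field}"`)
      }
      resolved[field] = stored[field]
    }
  }
  return resolved
}

// Usage with the T002 fixture shape and a made-up task id
const fixture = { user: 'marco.mercado@mail.com', task_id: 'STORED', test_data: true }
const store = { T002: { task_id: 'hypothetical-task-id' } }

const payload = resolveStored(fixture, store.T002)
console.log(payload.task_id) // hypothetical-task-id
console.log(fixture.task_id) // STORED
```

Working on a copy keeps the JSON fixture reusable if the same test case is read more than once in a run.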

Test Cases

For the test cases we're going to use 2 libraries, Mocha and Chai. With those libraries and the test data we're going to build our test cases, this time in code, so let's start with the users in users.test.js.

var chai = require("chai");
var chaiHttp = require("chai-http");
var app = require("../server");
var TEST_DATA = require("../test_data/users.data.json");

chai.use(chaiHttp)
chai.should()

describe("Users", function () {
  describe("Create user POST /users/createUser", function () {
    it("U001 Should create a new user", (done) => {
      let user = TEST_DATA["U001"].data
      chai.request(app)
        .post('/users/createUser')
        .send(user)
        .end((err, res) => {
          res.should.have.status(200)
          // DynamoDB returns how many capacity units we consumed; since we only write 1 record in this operation it should be 1
          res.body.should.have.property('CapacityUnits').eql(1)
          done()
        })
    })

    it("U002 Shouldn't create a new user with repeated email and return an error", (done) => {
      let user = TEST_DATA["U002"].data
      chai.request(app)
        .post('/users/createUser')
        .send(user)
        .end((err, res) => {
          res.should.have.status(500)
          done()
        })
    })

    it("U003 Shouldn't create a new user without email and return an error", (done) => {
      let user = TEST_DATA["U003"].data
      chai.request(app)
        .post('/users/createUser')
        .send(user)
        .end((err, res) => {
          res.should.have.status(500)
          done()
        })
    })
  })

  describe("Get user GET /users/getUserWithAssignments/:email", function () {
    it("U004 Should list all the assignments of a user", (done) => {
      let user = TEST_DATA["U004"].data
      chai.request(app)
        .get(`/users/getUserWithAssignments/${user.user}`)
        .end((err, res) => {
          res.should.have.status(200)
          res.body.should.have.property('user').to.be.an('object')
          res.body.should.have.property('assignments').to.be.an('array')
          done()
        })
    })
  })
})

As we can see, the "describe" blocks wrap the content of our cases: the first one groups our "Users" test cases, and the nested ones separate them by the endpoint and HTTP method we're testing. The "it" blocks are the individual tests that run; in each one we use chai to call the corresponding HTTP method and run assertions against the result.

Now the tasks.test.js:

var chai = require("chai");
var chaiHttp = require("chai-http");
var app = require("../server");
var TEST_DATA = require("../test_data/tasks.data.json");

// Holds the values produced by earlier tests (the created task_id), keyed by test case
var store = {}

chai.use(chaiHttp)
chai.should()

describe("Tasks", function () {
  describe("Create task POST /tasks/createTask", function () {
    it("T001 Should create a new task", (done) => {
      let task = TEST_DATA["T001"].data
      chai.request(app)
        .post('/tasks/createTask')
        .send(task)
        .end((err, res) => {
          res.should.have.status(200)
          res.body.should.have.property('task_id')
          res.body.should.have.property('CapacityUnits').eql(1)
          store["T002"] = { task_id: res.body.task_id }
          store["T003"] = { task_id: res.body.task_id }
          store["T005"] = { task_id: res.body.task_id }
          done()
        })
    })
  })

  describe("Assign task POST /tasks/assignTask", function () {
    it("T002 Should assign the task to a user and update the task status", (done) => {
      let task = TEST_DATA["T002"].data
      task.task_id = (task.task_id == "STORED") ? store["T002"].task_id : task.task_id
      chai.request(app)
        .post('/tasks/assignTask')
        .send(task)
        .end((err, res) => {
          res.should.have.status(200)
          // Since we're using DynamoDB transactions here, the capacity consumed is doubled, so 2 records correspond to 4 units
          res.body.should.have.property("CapacityUnits").eql(4)
          res.body.should.have.property("userAssigned").eql(task.user)
          res.body.should.have.property("taskAssigned").eql(task.task_id)
          done()
        })
    })

    it("T003 Shouldn't assign the task and return an error", (done) => {
      let task = TEST_DATA["T003"].data
      task.task_id = (task.task_id == "STORED") ? store["T003"].task_id : task.task_id
      chai.request(app)
        .post('/tasks/assignTask')
        .send(task)
        .end((err, res) => {
          res.should.have.status(500)
          done()
        })
    })
  })

  describe("Get all tasks GET /tasks/all", function () {
    it("T004 should list all tasks", (done) => {
      let task = TEST_DATA["T004"].data
      chai.request(app)
        .get('/tasks/all')
        .end((err, res) => {
          res.should.have.status(200)
          chai.expect(res.body).to.be.an('array')
          res.body.forEach(t => {
            t.should.have.property('sk').eql('task')
          })
          done()
        })
    })
  })

  describe("End task POST /tasks/endTask", function () {
    it("T005 Should mark as finished both the assignation and the task", (done) => {
      let task = TEST_DATA["T005"].data
      task.task_id = (task.task_id == "STORED") ? store["T005"].task_id : task.task_id
      chai.request(app)
        .post('/tasks/endTask')
        .send(task)
        .end((err, res) => {
          res.should.have.status(200)
          res.body.should.have.property("CapacityUnits").eql(4)
          done()
        })
    })
  })

  describe("Get all finished tasks GET /tasks/getByStatus/FINISHED", function () {
    it("T006 should list all tasks with status FINISHED", (done) => {
      let task = TEST_DATA["T006"].data
      chai.request(app)
        .get(`/tasks/getByStatus/${task.status}`)
        .end((err, res) => {
          res.should.have.status(200)
          chai.expect(res.body).to.be.an('array')
          res.body.forEach(t => {
            t.should.have.property('task_status').eql(task.status)
          })
          done()
        })
    })
  })
})
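
The CapacityUnits values asserted in these tests (1 for a single write, 4 for a transaction touching 2 records) follow from how DynamoDB charges writes: a standard write costs 1 unit per 1 KB of item size, rounded up, and a transactional write costs twice that. Here is a rough sketch of that arithmetic. The function names and the item sizes are my own assumptions for illustration, and it assumes a table without secondary indexes, which would add to the total.

```javascript
const KB = 1024

// Expected write capacity units for one item of the given size.
// Standard writes cost 1 unit per 1 KB (rounded up); transactional writes cost double.
function writeCapacityUnits(itemSizeBytes, { transactional = false } = {}) {
  const blocks = Math.max(1, Math.ceil(itemSizeBytes / KB))
  return transactional ? blocks * 2 : blocks
}

// Total units for a list of item sizes written in one request
function totalWriteCapacityUnits(itemSizes, options) {
  return itemSizes.reduce((sum, size) => sum + writeCapacityUnits(size, options), 0)
}

// Item sizes below are assumed; the test items are all well under 1 KB
console.log(totalWriteCapacityUnits([200]))                               // 1 (like T001: one small put)
console.log(totalWriteCapacityUnits([200, 150], { transactional: true })) // 4 (like T002: two items in a transaction)
```

Asserting on consumed capacity this way makes the tests sensitive to item size, so a test item that grows past 1 KB would change the expected numbers.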

API

Before we start coding, we're going to use the AWS CLI to create our DynamoDB table with the following command:

aws dynamodb create-table --table-name ServerlessSeries --attribute-definitions AttributeName=pk,AttributeType=S AttributeName=sk,AttributeType=S --key-schema AttributeName=pk,KeyType=HASH AttributeName=sk,KeyType=RANGE --provisioned-throughput ReadCapacityUnits=5,WriteCapacityUnits=5 --region us-east-1

Then we proceed to create our controllers, which is where all the business logic is going to live.
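
Before moving on to the controllers, a quick side note on the table itself: the same definition used in the create-table command can be written as the parameter object that the SDK's createTable operation accepts, which may be easier to read. This is just a sketch; the variable name createTableParams is mine, and the commented call assumes aws-sdk v2 is installed and your credentials are configured.

```javascript
// Same table definition as the create-table command, expressed as the
// parameter object accepted by the DynamoDB createTable operation.
const createTableParams = {
  TableName: 'ServerlessSeries',
  // Only the key attributes are declared; every other attribute is schemaless
  AttributeDefinitions: [
    { AttributeName: 'pk', AttributeType: 'S' },
    { AttributeName: 'sk', AttributeType: 'S' }
  ],
  // HASH is the partition key, RANGE is the sort key
  KeySchema: [
    { AttributeName: 'pk', KeyType: 'HASH' },
    { AttributeName: 'sk', KeyType: 'RANGE' }
  ],
  ProvisionedThroughput: { ReadCapacityUnits: 5, WriteCapacityUnits: 5 }
}

// With aws-sdk v2 installed and credentials configured, this could be sent with:
//   const AWS = require('aws-sdk')
//   new AWS.DynamoDB({ region: 'us-east-1' }).createTable(createTableParams, callback)
console.log(JSON.stringify(createTableParams.KeySchema))
```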

First our users.controller.js:

const AWS = require('aws-sdk')

var ddb = new AWS.DynamoDB.DocumentClient({ region: 'us-east-1' })

// Creates a user in its own partition (pk = email, sk = 'user')
exports.createUser = (req, res) => {
  let user = {
    pk: req.body.email,
    sk: 'user',
    first_name: req.body.first_name,
    last_name: req.body.last_name,
    test_data: req.body.test_data,
  }
  let params = {
    TableName: 'ServerlessSeries',
    Item: user,
    // Fail the write if an item with this key already exists (repeated email)
    ConditionExpression: 'attribute_not_exists(pk)',
    ReturnConsumedCapacity: 'TOTAL'
  }
  ddb.put(params, function (err, data) {
    if (err) res.status(500).send(err)
    else res.status(200).send(data.ConsumedCapacity)
  })
}

// Reads the whole user partition and splits it into the user record and its assignments
exports.getUserWithAssignments = (req, res) => {
  let params = {
    TableName: 'ServerlessSeries',
    ExpressionAttributeNames: {
      '#pk': 'pk'
    },
    ExpressionAttributeValues: {
      ':pk': req.params.email
    },
    KeyConditionExpression: '#pk = :pk'
  }
  ddb.query(params, function (err, data) {
    if (err) res.status(500).send(err)
    else {
      let result = {
        user: {},
        assignments: []
      }
      data.Items.forEach(i => {
        if (i.sk == 'user') result.user = i
        else result.assignments.push(i)
      })
      res.status(200).send(result)
    }
  })
}

Now our tasks.controller.js:

const AWS = require('aws-sdk')
const UUID = require('uuid')

var ddb = new AWS.DynamoDB.DocumentClient({ region: 'us-east-1' })

// Creates a task in its own partition (pk = generated task id, sk = 'task')
exports.createTask = (req, res) => {
  let task_id = UUID.v4()
  let task = {
    pk: task_id,
    sk: 'task',
    task_title: req.body.task_title,
    task_description: req.body.task_description,
    test_data: req.body.test_data
  }
  let params = {
    TableName: 'ServerlessSeries',
    Item: task,
    ReturnConsumedCapacity: 'TOTAL'
  }
  ddb.put(params, (err, data) => {
    if (err) res.status(500).send(err)
    else res.status(200).send({
      CapacityUnits: data.ConsumedCapacity.CapacityUnits,
      task_id: task_id
    })
  })
}

// Marks the task as ASSIGNED and creates the assignment in the user partition, in one transaction
exports.assignTask = (req, res) => {
  let assignment = {
    pk: req.body.user,
    sk: `task#${req.body.task_id}`,
    assignment_status: 'IN_PROGRESS',
    finished_at: null,
    assigned_on: new Date().toISOString(),
    test_data: req.body.test_data
  }
  let params = {
    TransactItems: [
      {
        Update: {
          Key: {
            pk: req.body.task_id,
            sk: 'task'
          },
          ExpressionAttributeValues: {
            ':task_status': 'ASSIGNED'
          },
          UpdateExpression: 'SET task_status = :task_status',
          TableName: 'ServerlessSeries'
        },
      },
      {
        Put: {
          Item: assignment,
          // Fail the transaction if this assignment already exists
          ConditionExpression: 'attribute_not_exists(sk)',
          TableName: 'ServerlessSeries'
        }
      }
    ],
    ReturnConsumedCapacity: 'TOTAL'
  }
  ddb.transactWrite(params, function (err, data) {
    if (err) res.status(500).send(err)
    else {
      res.status(200).send({
        CapacityUnits: data.ConsumedCapacity[0].CapacityUnits,
        userAssigned: req.body.user,
        taskAssigned: req.body.task_id
      })
    }
  })
}

// Lists every item whose sort key is 'task'
exports.getAllTasks = (req, res) => {
  let params = {
    TableName: 'ServerlessSeries',
    ExpressionAttributeValues: {
      ':sk': 'task'
    },
    FilterExpression: 'sk = :sk'
  }
  ddb.scan(params, function (err, data) {
    if (err) res.status(500).send(err)
    else res.status(200).send(data.Items)
  })
}

// Marks both the task and its assignment as FINISHED, in one transaction
exports.endTask = (req, res) => {
  let params = {
    TransactItems: [
      {
        Update: {
          Key: {
            pk: req.body.task_id,
            sk: 'task'
          },
          ExpressionAttributeValues: {
            ':task_status': 'FINISHED'
          },
          UpdateExpression: 'SET task_status = :task_status',
          TableName: 'ServerlessSeries'
        },
      },
      {
        Update: {
          Key: {
            pk: req.body.user_email,
            sk: `task#${req.body.task_id}`
          },
          ExpressionAttributeValues: {
            ':assignment_status': 'FINISHED',
            ':finished_at': new Date().toISOString()
          },
          UpdateExpression: 'SET assignment_status = :assignment_status, finished_at = :finished_at',
          TableName: 'ServerlessSeries'
        }
      }
    ],
    ReturnConsumedCapacity: 'TOTAL'
  }
  ddb.transactWrite(params, function (err, data) {
    if (err) res.status(500).send(err)
    else res.status(200).send(data.ConsumedCapacity[0])
  })
}

// Lists every item whose task_status matches the route parameter
exports.findByStatus = (req, res) => {
  let params = {
    TableName: 'ServerlessSeries',
    ExpressionAttributeValues: {
      ':task_status': req.params.task_status
    },
    FilterExpression: 'task_status = :task_status'
  }
  ddb.scan(params, function (err, data) {
    if (err) res.status(500).send(err)
    else res.status(200).send(data.Items)
  })
}

As you may notice, every method I wrote uses DynamoDB operations; to learn more about them, please refer to the DynamoDB documentation of the AWS SDK for Node.js. Besides the DynamoDB operations, there are 2 parameters worth noticing in the Express framework. The first one is req, which contains all the HTTP request information such as headers, body, parameters, cookies, etc. The second one is res, which lets us send the response from the server to the client; we're going to use res to set the status and the content of the server response.
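
Since a controller only talks to req, res and the DynamoDB client, it can also be exercised without Express or AWS at all. The following is a minimal sketch of that idea, not the code used in this project: makeCreateUser, fakeResponse and fakeDdb are hypothetical helpers, and the fake client only imitates the put(params, callback) shape and the "repeated email" condition.

```javascript
// Hypothetical factory: builds a createUser-style handler around any client
// that exposes put(params, callback), such as the DocumentClient.
function makeCreateUser(ddb) {
  return (req, res) => {
    const params = {
      TableName: 'ServerlessSeries',
      Item: { pk: req.body.email, sk: 'user', first_name: req.body.first_name },
      ConditionExpression: 'attribute_not_exists(pk)',
      ReturnConsumedCapacity: 'TOTAL'
    }
    ddb.put(params, (err, data) => {
      if (err) res.status(500).send(err)
      else res.status(200).send(data.ConsumedCapacity)
    })
  }
}

// Minimal stand-in for Express's res: records the status and the body sent
function fakeResponse() {
  const res = { statusCode: null, body: null }
  res.status = (code) => { res.statusCode = code; return res }
  res.send = (body) => { res.body = body; return res }
  return res
}

// Fake client that rejects a second put for the same pk (imitates the condition)
function fakeDdb() {
  const seen = new Set()
  return {
    put(params, callback) {
      if (seen.has(params.Item.pk)) return callback(new Error('ConditionalCheckFailed'))
      seen.add(params.Item.pk)
      callback(null, { ConsumedCapacity: { TableName: params.TableName, CapacityUnits: 1 } })
    }
  }
}

const createUser = makeCreateUser(fakeDdb())
const req = { body: { email: 'marco.mercado@mail.com', first_name: 'Marco' } }

const first = fakeResponse()
createUser(req, first)
const second = fakeResponse()
createUser(req, second)

console.log(first.statusCode, second.statusCode) // 200 500
```

The trade-off is that a fake client only checks the controller's own logic; the chai tests against the real table are still what verify the DynamoDB expressions.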

Once our controllers are coded, it's time to wire them to their corresponding routes, so first let's look at our users.routes.js:

module.exports = (app) => {
  const usersController = require('../controllers/users.controller')
  var router = require('express').Router()

  router.post('/createUser', usersController.createUser)
  router.get('/getUserWithAssignments/:email', usersController.getUserWithAssignments)

  app.use('/users', router)
}

And our tasks.routes.js:

module.exports = (app) => {
  const taskController = require('../controllers/tasks.controller')
  var router = require('express').Router()

  router.post('/createTask', taskController.createTask)
  router.post('/assignTask', taskController.assignTask)
  router.post('/endTask', taskController.endTask)
  router.get('/all', taskController.getAllTasks)
  router.get('/getByStatus/:task_status', taskController.findByStatus)

  app.use('/tasks', router)
}

This part is pretty simple: we're basically routing a specific combination of HTTP method and path, with its respective parameters, to a controller method.

Now that our routes are completed we need to reference those routers in the server.js file:

const express = require('express')

let app = express()

app.use(express.json())

app.get('/', function(req, res) {
  res.send('Hello world')
})

const PORT = 3000

app.listen(PORT, function() {
  console.log(`App listening on http://localhost:${PORT}`)
})

require('./app/routes/users.routes')(app)
require('./app/routes/tasks.routes')(app)

module.exports = app

We're almost there. Now, in the scripts section of the package.json file, replace the previous test attribute with "test": "mocha --exit", and finally it's time to run our test cases against our code with the command npm run test. If you did everything right, your output should look something like this:

Further Steps

Nice! Now our code is tested and ready to provide functionality. For the last steps we need a couple of things. First of all, it's nice to have a clean up routine, which is why we added the test_data attribute to all of our test data. I made a new file called cleanUp.js and I run it after the tests are done by changing the test command in my package.json to "test": "mocha --exit && node cleanUp.js". My code looks like this:

const AWS = require('aws-sdk')

var ddb = new AWS.DynamoDB.DocumentClient({ region: 'us-east-1' })

console.log("Starting clean up routine")

let done = 0

// Find every item flagged as test data
let params = {
  TableName: 'ServerlessSeries',
  ExpressionAttributeValues: {
    ':test_data': true
  },
  FilterExpression: 'test_data = :test_data'
}

ddb.scan(params, function (err, data) {
  if (err) console.log("Error cleaning up")
  else {
    let items = data.Items
    // Delete each test item by its full primary key (pk + sk)
    data.Items.forEach(i => {
      let p = {
        TableName: "ServerlessSeries",
        Key: {
          pk: i.pk,
          sk: i.sk,
        },
      };
      ddb.delete(p, function (err, data) {
        if (err) console.log(err);
        else {
          done++;
          if (done == items.length) {
            console.log("Successfully cleaned test data");
          }
        }
      });
    })
  }
})

The second thing to consider is that we need to modify serverless.yml to add the permissions required to interact with DynamoDB. Remember that in our local environment that interaction is performed on our behalf, but in the AWS Cloud the best practice is to assign an IAM Role with the required permissions to the compute entity (in this case Lambda), so the file should look like this:

service: API-Serverless-Series

provider:
  name: aws
  runtime: nodejs12.x
  memorySize: 128
  stage: dev
  region: us-east-1
  iamRoleStatements:
    - Effect: Allow
      Action:
        - dynamodb:PutItem
        - dynamodb:UpdateItem
        - dynamodb:Query
        - dynamodb:Scan
        - dynamodb:GetItem
        - dynamodb:DeleteItem
      Resource:
        - 'arn:aws:dynamodb:us-east-1:<AWS_ACCOUNT_ID>:table/ServerlessSeries'
        - 'arn:aws:dynamodb:us-east-1:<AWS_ACCOUNT_ID>:table/ServerlessSeries/*'

functions:
  app:
    handler: lambda.handler
    events:
      - http: ANY /
      - http: 'ANY {proxy+}'

The last consideration is that the ddb.scan operation has some limitations. If the data scanned exceeds the maximum size limit of 1 MB, the scan stops and the results are returned normally, but the response includes an extra attribute named LastEvaluatedKey. It contains the value needed to continue the scan in a subsequent operation, so in our next call we can pass that key as ExclusiveStartKey to continue scanning the table. Keep in mind that the best practice is to design everything to always use ddb.query, and you can lean on Global Secondary Indexes to improve your queries. Let me know on socials if you would like me to do a detailed post on DynamoDB design and operations.
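
The LastEvaluatedKey / ExclusiveStartKey loop could look roughly like this. Take it as a sketch under a few assumptions: scanAll is a hypothetical helper that is not part of the project code, it expects a client whose scan(params) returns an object with a promise() method (as the aws-sdk v2 DocumentClient does), and the fake paged client below exists only so the example runs without AWS.

```javascript
// Follows LastEvaluatedKey until the scan is complete and returns every item.
// `ddb` is any client whose scan(params) returns an object with promise().
async function scanAll(ddb, params) {
  const items = []
  let startKey
  do {
    // Only send ExclusiveStartKey once a previous page has returned one
    const page = await ddb
      .scan({ ...params, ...(startKey && { ExclusiveStartKey: startKey }) })
      .promise()
    items.push(...(page.Items || []))
    startKey = page.LastEvaluatedKey
  } while (startKey)
  return items
}

// Fake client that serves two items per page, for demonstration only
function fakePagedClient(allItems, pageSize = 2) {
  return {
    scan(params) {
      const start = params.ExclusiveStartKey ? params.ExclusiveStartKey.index : 0
      const end = start + pageSize
      const page = { Items: allItems.slice(start, end) }
      if (end < allItems.length) page.LastEvaluatedKey = { index: end }
      return { promise: () => Promise.resolve(page) }
    }
  }
}

const sample = [1, 2, 3, 4, 5].map((n) => ({ pk: `task-${n}`, sk: 'task' }))
const allItemsPromise = scanAll(fakePagedClient(sample), { TableName: 'ServerlessSeries' })
allItemsPromise.then((items) => console.log(items.length)) // 5
```

With a real DocumentClient you would pass ddb and the same params used in the controllers or in cleanUp.js; every extra page is another read against the table, which is one more reason to prefer query where the data model allows it.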

And if you made it this far, I would like to say thank you. I'm putting a good amount of effort into writing this blog, so if you find it useful or interesting please consider sharing, and I'll see you next time!