Cloud Scalability

Learn why "Cloud Computing" changed the game by optimizing costs and improving performance and availability

Introduction

We hear about cloud computing more and more, but why is the cloud such a big deal? There are many things that make it great, but in this article we're going to focus on scalability, which in a nutshell is the way we expand or shrink our IT resources to meet business demand. Before we get into scalability in the cloud, let's first go over a couple of terms and look at the problem with traditional infrastructure.

Types of Scaling

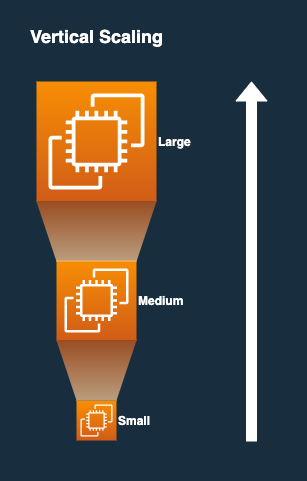

Vertical Scaling is a technique in which we upgrade the computing capabilities of our current infrastructure, for example by giving an existing server more CPU or memory.

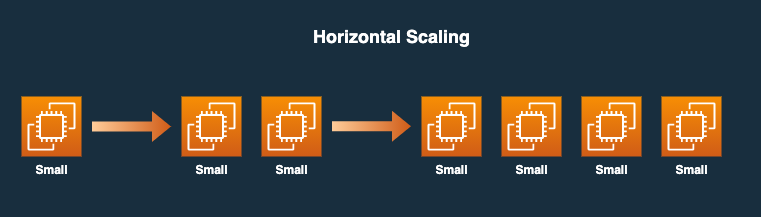

Horizontal Scaling is when, instead of adding more computing capabilities to our current infrastructure, we add more servers and distribute the traffic among them.
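To make "distribute the traffic" a bit more concrete, here is a minimal sketch of a round-robin distribution, which is only one of several possible strategies. The `distribute` function and the server names are hypothetical and exist just for this illustration; a real setup would normally rely on a load balancer.

```python
from itertools import cycle


def distribute(requests, servers):
    """Assign each request to the next server in turn (round-robin)."""
    assignment = {server: [] for server in servers}
    # cycle() repeats the server list for as long as there are requests.
    for request, server in zip(requests, cycle(servers)):
        assignment[server].append(request)
    return assignment


# Hypothetical example: six requests spread across three servers.
result = distribute(range(6), ["server-a", "server-b", "server-c"])
for server, handled in result.items():
    print(server, handled)
```

Adding a fourth name to the list is the code equivalent of scaling horizontally: each server ends up handling a smaller share of the requests.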

Autoscaling means monitoring a certain metric (like % of CPU utilization) and automatically triggering the scaling process when a threshold value is crossed.

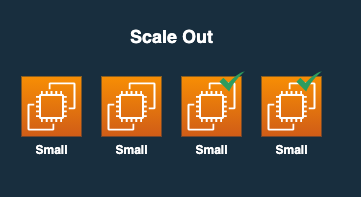

Scale Out is used when you need more capacity, so you expand your current infrastructure.

Scale In is the opposite of Scale Out: when you no longer need a certain amount of infrastructure, you decrease your capacity to optimize costs.
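The three terms above (autoscaling, scale out and scale in) fit together in a simple decision rule. The sketch below is only an illustration: the function name, the 70% and 30% thresholds and the instance limits are assumptions of mine, not values from any specific cloud provider.

```python
def next_instance_count(
    current: int,
    cpu_percent: float,
    scale_out_above: float = 70.0,  # assumed threshold
    scale_in_below: float = 30.0,   # assumed threshold
    min_instances: int = 1,         # assumed lower limit
    max_instances: int = 10,        # assumed upper limit
) -> int:
    """Return how many instances we should run after checking the metric."""
    if cpu_percent > scale_out_above:
        # Scale out: add one instance, but never exceed the maximum.
        return min(current + 1, max_instances)
    if cpu_percent < scale_in_below:
        # Scale in: remove one instance, but never go below the minimum.
        return max(current - 1, min_instances)
    # The metric is inside the comfortable range, so nothing changes.
    return current


print(next_instance_count(current=2, cpu_percent=85.0))  # scale out
print(next_instance_count(current=2, cpu_percent=10.0))  # scale in
print(next_instance_count(current=2, cpu_percent=50.0))  # hold
```

Keeping a gap between the two thresholds avoids adding and removing instances back and forth when the metric hovers around a single value.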

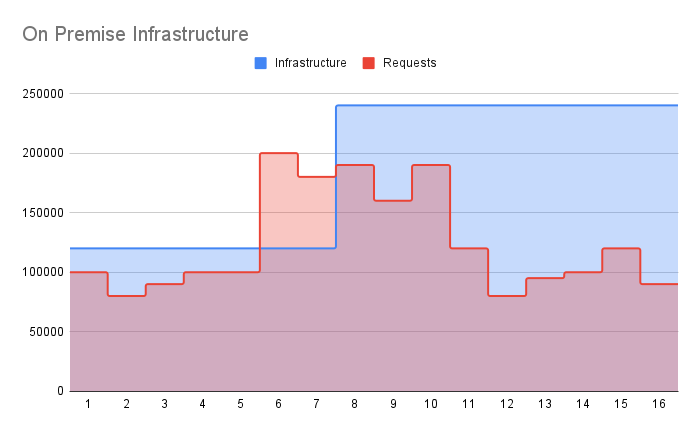

Traditional Infrastructure

Let me explain the graphic above a little, because I made the upcoming graphics in the same format. The X axis shows time in days and the Y axis shows user requests. The red area represents the actual requests that the infrastructure is receiving, and the blue area is the maximum number of requests that the infrastructure can handle.

As you can see, on day 6 we have a sudden spike in traffic of 200k requests, which surpasses our provisioned infrastructure. The IT team quickly starts working on a solution by provisioning more infrastructure, but depending on the case that can take a couple of days or even weeks (in a good scenario, hours). For this example, let's assume that the scaling took 2 days, and that now we can handle 240k requests, which is double the original capacity.

This could sound fine, but now we have another problem. On day 12 the traffic went back to its original behavior, but we have already acquired a new server, and we would like to be ready in case a sudden spike of traffic happens again. So we end up paying for and managing more infrastructure than we need.
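The numbers in this scenario can be worked out with a short script. The spike (200k), the scaled capacity (240k) and the original capacity (half of 240k, so 120k) come from the example above. The baseline traffic of 100k, and the idea that the spike holds from day 6 through day 11, are assumptions I'm making just to have something to compute.

```python
BASELINE_DEMAND = 100_000    # assumption: normal traffic is not stated above
SPIKE_DEMAND = 200_000       # spike that starts on day 6
ORIGINAL_CAPACITY = 120_000  # half of the scaled capacity
SCALED_CAPACITY = 240_000    # available 2 days after the spike (day 8)


def demand(day: int) -> int:
    # Assumption: the spike lasts from day 6 until traffic drops on day 12.
    return SPIKE_DEMAND if 6 <= day <= 11 else BASELINE_DEMAND


def capacity(day: int) -> int:
    # The new infrastructure is ready on day 8 and is kept afterwards.
    return SCALED_CAPACITY if day >= 8 else ORIGINAL_CAPACITY


def summarize(days):
    """Return (day, unserved requests, idle capacity) for each day."""
    rows = []
    for day in days:
        d, c = demand(day), capacity(day)
        rows.append((day, max(d - c, 0), max(c - d, 0)))
    return rows


for day, unserved, idle in summarize(range(1, 15)):
    print(f"day {day:2d}: unserved={unserved:7,d} idle={idle:7,d}")
```

Under these assumptions, days 6 and 7 leave 80k requests unserved, and from day 12 onward 140k of the 240k capacity sits idle, which is exactly the "paying for more than we need" problem.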

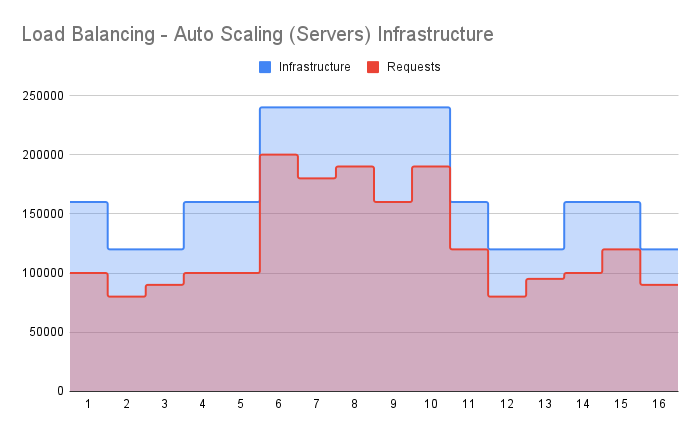

Cloud Infrastructure

As we saw with traditional infrastructure, we want to keep the blue and red areas as close together as possible, always keeping the blue one on top, in order to optimize costs and prevent a crash caused by a sudden spike in traffic. If you paid attention to the concepts above, you can tell that you can optimize costs by scaling in, be prepared for sudden spikes in traffic by scaling out, and automate those actions by setting up an autoscaling process. If you apply those concepts, you will have a highly scalable infrastructure.
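Keeping the blue area just above the red one can be sketched as a small calculation: for a given demand, how many instances do we need so that capacity stays above it with some margin? The per-instance capacity of 60k requests and the 20% headroom below are assumptions for illustration only, and `instances_needed` is a hypothetical helper, not a cloud provider API.

```python
def instances_needed(
    demand: int,
    per_instance_capacity: int = 60_000,  # assumed capacity of one instance
    headroom_percent: int = 20,           # assumed safety margin above demand
    min_instances: int = 1,
) -> int:
    """Smallest instance count whose capacity covers demand plus headroom."""
    # Integer arithmetic avoids floating-point rounding surprises.
    target = demand * (100 + headroom_percent)
    unit = per_instance_capacity * 100
    count = -(-target // unit)  # ceiling division
    return max(min_instances, count)


# Hypothetical traffic: quiet days, a spike, then back to normal.
for demand in [100_000, 100_000, 200_000, 200_000, 100_000]:
    count = instances_needed(demand)
    print(f"demand={demand:7,d} instances={count} capacity={count * 60_000:7,d}")
```

With these numbers the capacity rises to 240k during the spike and drops back to 120k afterwards, so we are not stuck paying for the peak once traffic goes back to normal.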

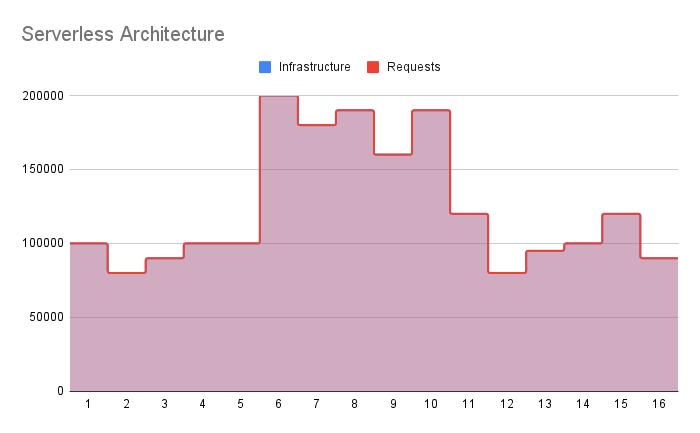

Serverless

Maybe you're wondering what happened to the blue area in the image above. What if I told you that it's still there, would you believe me? I hope you do, because serverless is changing the game right now: with server-based infrastructure there's no way to match your capacity to your demand as tightly as serverless does. In essence, serverless is a model that the main cloud providers are currently encouraging us to adopt, offering powerful and flexible services that remove the overhead of managing infrastructure. They offer serverless solutions for storage, databases, monitoring, logging, event orchestration, computing, data streaming, analytics, and the list goes on.

So if you're interested in learning more about serverless, I'll leave you a few links below. See you next time!