AWS Step Functions Workflow - AWS SAM example

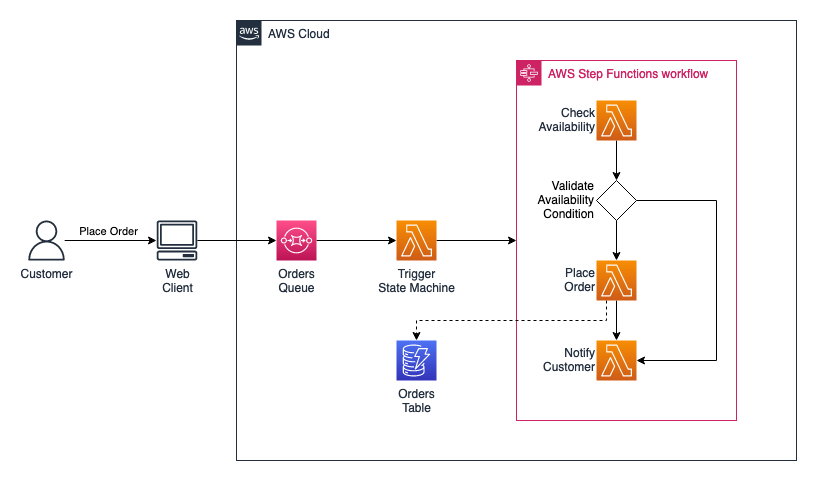

In this post we're going to build an AWS Step Functions state machine that simulates an ordering process started by the customer.

Introduction

As I mentioned in the description, we're going to build an AWS Step Functions state machine that simulates an ordering process started by the customer. The cool thing is that I'm going to show you how to write it in an AWS SAM (Serverless Application Model) template, but it's important that you understand the architecture first so you can take full advantage of the example.

Architecture

For this example I've chosen a common use case: the customer places an order for a product by sending it to a queue, a service checks the availability, places the order if the requested product is available, and finally notifies the customer. We're going to oversimplify some of the functions so we can focus on the goal, which is for you to understand how to integrate AWS Step Functions into your solution by taking advantage of AWS SAM.

Requirements

- AWS Account

- AWS CLI installed & configured

- AWS SAM CLI installed & configured

- S3 Bucket

- VS Code with the AWS Toolkit extension installed (optional)

- Node.js and npm installed

NOTE: For this example you need to manually create an S3 bucket to store some artifacts generated by AWS SAM. If you want to step up this example and automate the creation of the entire solution, you could combine it with the DevOps guide for AWS SAM projects that I wrote in this link; just take into account that minor adjustments and additional permissions could be required.

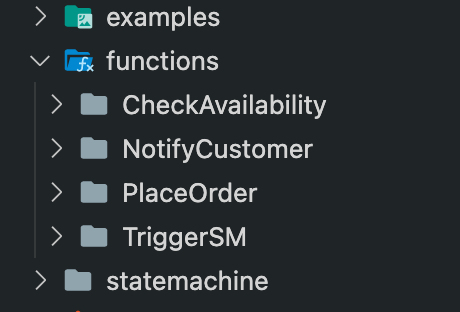

Project Structure

Before we start, let's create the folder structure inside our project:

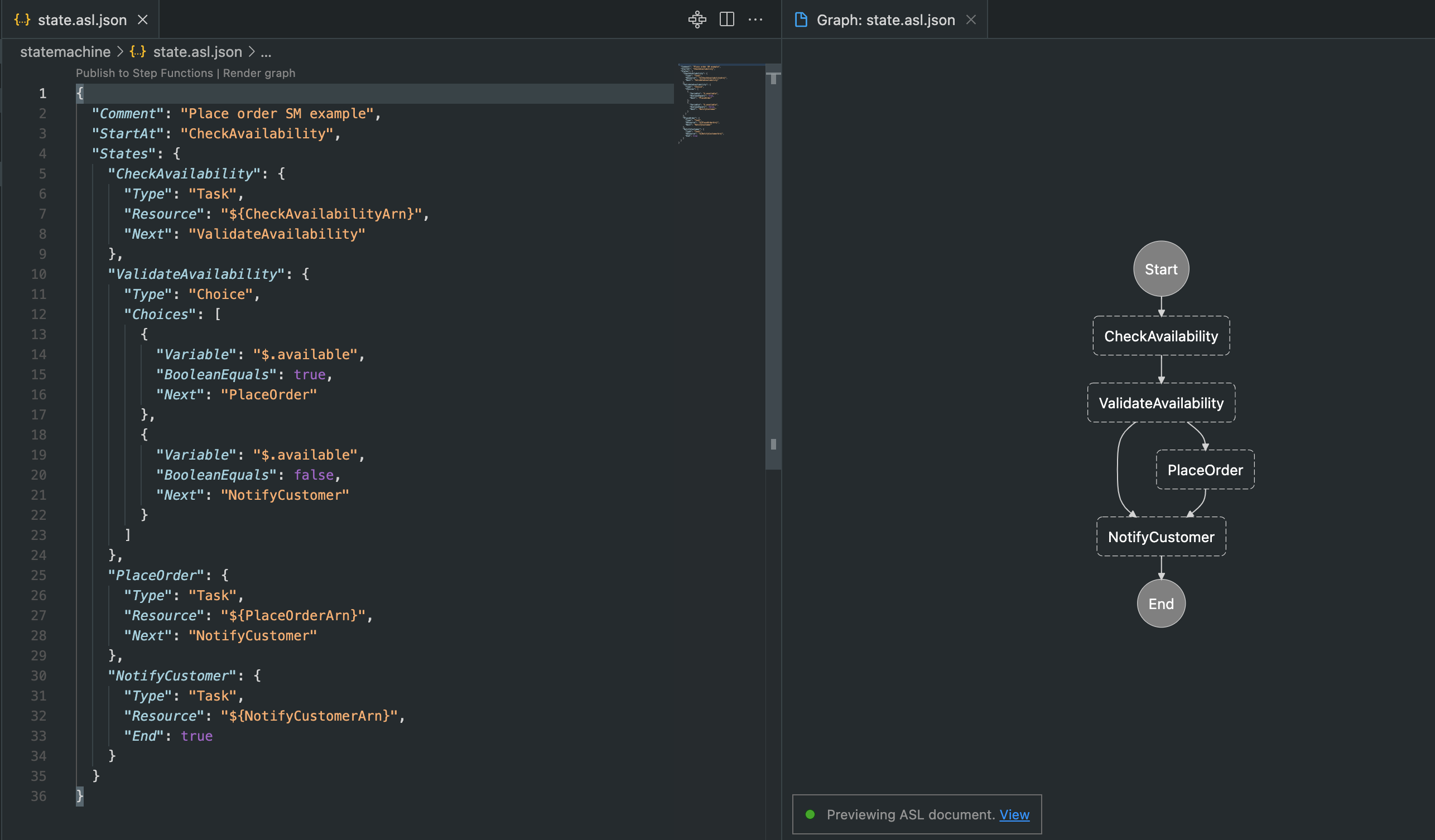

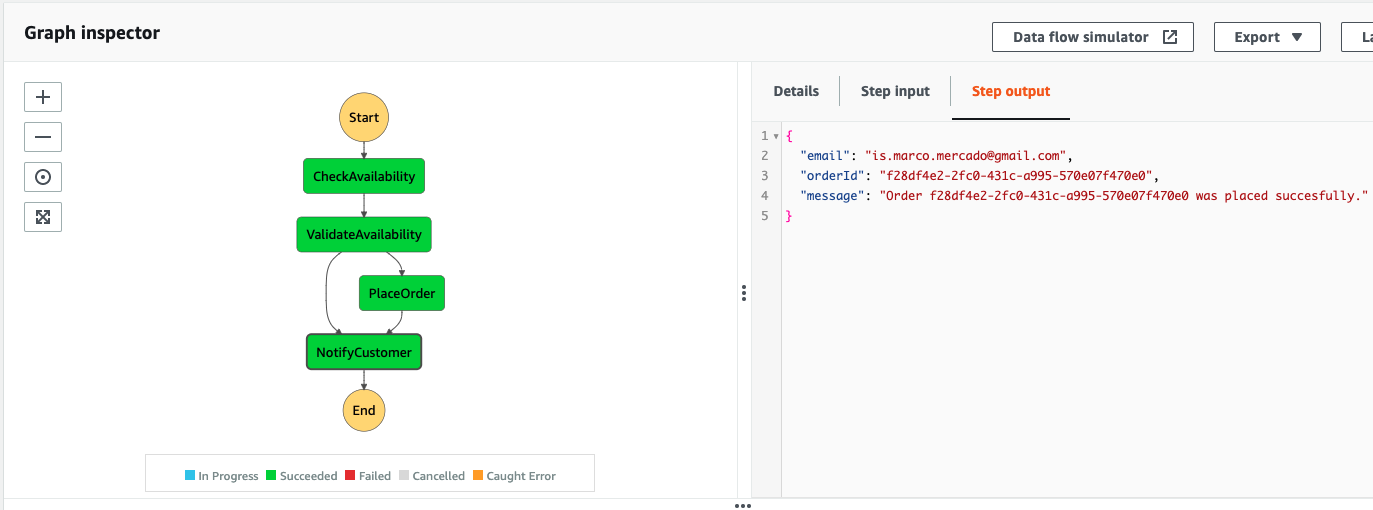

Step 1: State Machine Definition

Personally, I like to start with the definition of the state machine because it helps me get a picture of the whole flow and understand it before I start coding. So, let's understand a little bit about AWS Step Functions state machines. They work like an interactive flow diagram made of different types of states, like Task, Choice, Map, Pass, etc. Every state has a type and a next state (or marks the end of the state machine), and every state has an input and an output. The first input is sent by the entity that starts the state machine (in this case the function that pulls the message from the queue); after that, the input of a state is the output of the previous state, and so on. The state machine definition is written in JSON. We're going to name it state.asl.json, place it under the /statemachine directory in our project, and write the following:

{
  "Comment": "Place order SM example",
  "StartAt": "CheckAvailability",
  "States": {
    "CheckAvailability": {
      "Type": "Task",
      "Resource": "${CheckAvailabilityArn}",
      "Next": "ValidateAvailability"
    },
    "ValidateAvailability": {
      "Type": "Choice",
      "Choices": [
        {
          "Variable": "$.available",
          "BooleanEquals": true,
          "Next": "PlaceOrder"
        },
        {
          "Variable": "$.available",
          "BooleanEquals": false,
          "Next": "NotifyCustomer"
        }
      ]
    },
    "PlaceOrder": {
      "Type": "Task",
      "Resource": "${PlaceOrderArn}",
      "Next": "NotifyCustomer"
    },
    "NotifyCustomer": {
      "Type": "Task",
      "Resource": "${NotifyCustomerArn}",
      "End": true
    }
  }
}

There are a couple of things that I want you to notice. First, all of the "Resource" attributes reference a variable that AWS SAM will replace with the ARN of the corresponding function. Also, the "ValidateAvailability" state is a Choice state, which is why it doesn't have a "Resource" attribute; instead, it's a conditional that takes a variable from the output of the previous state and picks the next step depending on its value. For more information about the state machine definition check this link.
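To make the input/output chaining and the Choice state more concrete, here is a minimal local sketch that walks the same definition in plain Node.js. It is not how Step Functions works internally: the `tasks` object and the `run` function are hypothetical stand-ins, the fixed `order-123` id is made up, and the input is simplified to the bare order instead of a full SQS event.

```javascript
// Minimal local walk-through of the definition above. This only mimics
// Task and Choice states; it is not the real Step Functions engine.
const definition = {
  StartAt: 'CheckAvailability',
  States: {
    CheckAvailability: { Type: 'Task', Resource: 'checkAvailability', Next: 'ValidateAvailability' },
    ValidateAvailability: {
      Type: 'Choice',
      Choices: [
        { Variable: '$.available', BooleanEquals: true, Next: 'PlaceOrder' },
        { Variable: '$.available', BooleanEquals: false, Next: 'NotifyCustomer' }
      ]
    },
    PlaceOrder: { Type: 'Task', Resource: 'placeOrder', Next: 'NotifyCustomer' },
    NotifyCustomer: { Type: 'Task', Resource: 'notifyCustomer', End: true }
  }
}

// Hypothetical stand-ins for the Lambda functions, keyed by "Resource".
const tasks = {
  checkAvailability: (input) => ({ message: input, available: input.itemName === 'Nvidia RTX 3070' }),
  placeOrder: (input) => ({ ...input, order_id: 'order-123' }),
  notifyCustomer: (input) => ({ orderId: input.available ? input.order_id : null })
}

function run(definition, input) {
  const visited = []
  let name = definition.StartAt
  let data = input
  while (name) {
    const state = definition.States[name]
    visited.push(name)
    if (state.Type === 'Task') {
      // The output of a Task becomes the input of the next state.
      data = tasks[state.Resource](data)
      name = state.End ? null : state.Next
    } else if (state.Type === 'Choice') {
      // Only top-level "$.field" paths and BooleanEquals are handled here.
      const rule = state.Choices.find((c) => data[c.Variable.slice(2)] === c.BooleanEquals)
      if (!rule) throw new Error(`No choice matched in ${name}`)
      name = rule.Next
    } else {
      throw new Error(`Unsupported state type: ${state.Type}`)
    }
  }
  return { visited, output: data }
}

const availableRun = run(definition, { itemName: 'Nvidia RTX 3070' })
const unavailableRun = run(definition, { itemName: 'Something else' })
console.log(availableRun.visited.join(' -> '))
console.log(unavailableRun.visited.join(' -> '))
```

The second run skips PlaceOrder entirely, which is why the notification step later has to cope with a missing order id.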

Additionally, if you're using VS Code and installed the AWS Toolkit extension as I mentioned before, you should be able to visualize your state machine from the editor by clicking the "Render graph" button in the top-right corner.

Step 2: Write the functions

Trigger SM Function

Unfortunately, at the moment Amazon SQS doesn't support AWS Step Functions as a destination, which is why we're going to write an intermediate Lambda function that triggers the state machine with the AWS SDK. So let's jump into our functions/TriggerSM directory and initialize a new npm project with the npm init -y command, which should create a standard package.json file. Then we create a triggerSM.js file and write the following code:

const AWS = require('aws-sdk')

exports.handler = async (event) => {
  var stepfunctions = new AWS.StepFunctions();
  var params = {
    stateMachineArn: process.env.SM_ARN, /* required */
    input: JSON.stringify(event)
  };
  let response = await new Promise((resolve, reject) => {
    stepfunctions.startExecution(params, function (err, data) {
      if (err) reject(err); // an error occurred
      else resolve(data); // successful response
    });
  })
  return response
};

As you can see, it's pretty simple code: we're just taking the ARN of the state machine from an environment variable that we're going to define in our template and sending whatever triggers our Lambda function (we know that will be SQS) to the state machine.
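Since the whole SQS event is forwarded as the execution input, it helps to see what that input looks like. The sketch below uses a trimmed-down sample event and a hypothetical `buildStartExecutionParams` helper that only builds the parameters without calling AWS; the ARN is a made-up placeholder.

```javascript
// Trimmed-down SQS event; real events carry more fields per record
// (messageId, receiptHandle, attributes, ...).
const sampleSqsEvent = {
  Records: [
    {
      eventSource: 'aws:sqs',
      body: JSON.stringify({ itemName: 'Nvidia RTX 3070', customerId: '1045' })
    }
  ]
}

// Same parameter shape the handler passes to startExecution.
function buildStartExecutionParams(event, stateMachineArn) {
  return { stateMachineArn, input: JSON.stringify(event) }
}

// The ARN below is a placeholder, not a real resource.
const params = buildStartExecutionParams(
  sampleSqsEvent,
  'arn:aws:states:us-east-1:123456789012:stateMachine:place-order-dev'
)

// The first state receives the parsed input, so the record body
// is still a JSON string at that point and has to be parsed again.
const firstStateInput = JSON.parse(params.input)
console.log(typeof firstStateInput.Records[0].body) // "string"
console.log(JSON.parse(firstStateInput.Records[0].body).itemName)
```

This is the reason the next function has to call JSON.parse on the record body before it can read the order fields.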

Check Availability

We're going to initialize our Node.js project under the functions/CheckAvailability directory the same way as before, and create the checkAvailability.js file with the following code:

/*
  NOTE: We're going to write a simple if to simulate a validation.
  In a real world example this could check the availability on DynamoDB
  through the AWS SDK, directly to MySQL or even making a request to
  another service in a service oriented architecture (SOA)
*/
exports.handler = async (event) => {
  let message = JSON.parse(event.Records[0].body)
  let availableItemName = "Nvidia RTX 3070" // Obviously it's an example, everyone knows that there aren't graphics cards available
  let available = (message.itemName == availableItemName)
  const response = {
    message: message,
    available: available
  };
  return response;
};

Once our state machine starts, the first step is to check the availability of the requested product. Notice that the first thing we do is parse the message body from the SQS message, then we simulate a conditional to determine if the product is available, and finally we return the message and the availability as the output. It's really important that you understand that whatever you return from the function will be the input of the following step.
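If you want to try this logic before deploying, a rough local sketch could look like the following. It copies the availability check into a plain synchronous function; `checkAvailability` and `toSqsEvent` are hypothetical names used only for this illustration.

```javascript
// Local copy of the availability logic so it can run without deploying.
const AVAILABLE_ITEM_NAME = 'Nvidia RTX 3070'

function checkAvailability(event) {
  const message = JSON.parse(event.Records[0].body)
  return { message, available: message.itemName === AVAILABLE_ITEM_NAME }
}

// Hypothetical helper that wraps an order in a minimal SQS-like event.
function toSqsEvent(order) {
  return { Records: [{ body: JSON.stringify(order) }] }
}

const inStock = checkAvailability(toSqsEvent({ itemName: 'Nvidia RTX 3070', customerId: '1045' }))
const outOfStock = checkAvailability(toSqsEvent({ itemName: 'Nvidia RTX 3090', customerId: '1045' }))

console.log(inStock.available, outOfStock.available)
```

Keep in mind that the function only reads the first record, so if the queue delivers more than one message per batch, the remaining ones would be ignored.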

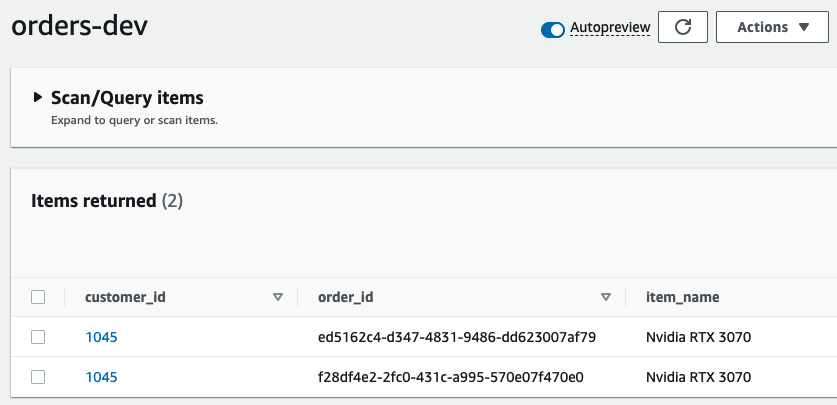

Place Order

Let's initialize our Node.js project under the functions/PlaceOrder directory the same way as before, but this time we're going to install an additional library to generate unique ids with the npm i uuid command (remember that all of the npm commands are executed inside the respective function directory). Then we create our placeOrder.js file and write the following:

const AWS = require('aws-sdk')
const uuid = require('uuid')
const dynamodb = new AWS.DynamoDB.DocumentClient({ region: "us-east-1" })

exports.handler = (event, context, callback) => {
  let orderId = uuid.v4()
  let params = {
    TableName: process.env.DDB_TABLE,
    Item: {
      customer_id: event.message.customerId,
      order_id: orderId,
      item_name: event.message.itemName
    },
    ReturnConsumedCapacity: 'TOTAL'
  }
  let response = {
    message: event.message,
    order_id: orderId,
    available: event.available
  }
  dynamodb.put(params, (err, data) => {
    if (err) {
      console.log(err)
      callback(err)
    } else {
      console.log(data)
      callback(null, response)
    }
  })
};

The first thing is to generate a unique identifier for the order. Next we define the parameters of the DynamoDB operation; notice that the DynamoDB table name is an environment variable that will be defined in the AWS SAM template.
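To see how the input from the previous state maps to the DynamoDB item and to the output passed on to the next state, here is a small sketch that builds both without touching AWS. `buildPlaceOrder` is a hypothetical helper, the table name `orders-dev` is assumed from the template naming, and Node's built-in `crypto.randomUUID` is swapped in for the uuid package so the snippet has no dependencies.

```javascript
const { randomUUID } = require('crypto') // built-in stand-in for uuid.v4()

// Builds the put parameters and the state output from the state input.
function buildPlaceOrder(event, tableName, orderId = randomUUID()) {
  const params = {
    TableName: tableName,
    Item: {
      customer_id: event.message.customerId,
      order_id: orderId,
      item_name: event.message.itemName
    }
  }
  const response = { message: event.message, order_id: orderId, available: event.available }
  return { params, response }
}

// Shape of the output produced by the check availability step.
const placeOrderInput = {
  message: { itemName: 'Nvidia RTX 3070', customerId: '1045' },
  available: true
}

// A fixed id is passed here only to keep the printed result stable.
const placed = buildPlaceOrder(placeOrderInput, 'orders-dev', 'fixed-id-for-the-example')
console.log(placed.params.Item)
console.log(placed.response)
```

Note that the same order id ends up both in the stored item and in the output, which is what lets the notification step mention it.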

NOTE: To learn more about DynamoDB operations you could look at this post for examples or at the official AWS Docs.

Notify Customer

We're going to initialize our Node.js project under the functions/NotifyCustomer directory the same way as before, and create the notifyCustomer.js file with the following code:

/*
  NOTE: We're going to write a simple log of notification.
  In a real world example this could use AWS SNS to correctly distribute
  the notification.
*/
exports.handler = async (event) => {
  let notification = {
    email: event.message.customerEmail,
    orderId: (event.available) ? event.order_id : null,
    message: (event.available) ? `Order ${event.order_id} was placed successfully.` : "Unfortunately we weren't able to place your order."
  }
  return notification
};

As I mentioned in the note, in a real world example this function could use AWS SNS or any other service to correctly distribute the notification but, as this post is not about notifications and is already a little bit long, we're going to simply return a message with the order information.
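This step can be reached from two different paths, so its input has two possible shapes. The sketch below runs the same notification logic against both; `buildNotification` is a hypothetical local copy, and the email address and order id are made-up sample values.

```javascript
// Local copy of the notification logic for experimenting with both inputs.
function buildNotification(event) {
  return {
    email: event.message.customerEmail,
    orderId: event.available ? event.order_id : null,
    message: event.available
      ? `Order ${event.order_id} was placed successfully.`
      : "Unfortunately we weren't able to place your order."
  }
}

const customerMessage = { itemName: 'Nvidia RTX 3070', customerEmail: 'customer@example.com' }

// Input when the step comes after PlaceOrder: order_id is present.
const placedNotice = buildNotification({ message: customerMessage, order_id: 'abc-123', available: true })

// Input when the Choice state jumps straight here: there is no order_id.
const rejectedNotice = buildNotification({ message: customerMessage, available: false })

console.log(placedNotice.message)
console.log(rejectedNotice.message)
```

Checking `available` first is what keeps the function from reading an order id that was never generated.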

Step 3: SAM Template

As this is the longest file of the example, I'm going to break it up a little bit. First we create a template.yml file in the root directory of our project, then we write the parameters and global settings:

AWSTemplateFormatVersion: 2010-09-09
Transform: AWS::Serverless-2016-10-31

Parameters:
  Environment:
    Type: String
    Description: Environment that will suffix the aws resources

Globals:
  Function:
    Timeout: 5

Now we create the resources of our solution (from this point on, every block of code is indented under Resources). First our log group and the SQS queue:

Resources:
  Logs:
    Type: AWS::Logs::LogGroup

  PlaceOrderQueue:
    Type: AWS::SQS::Queue
    Properties:
      QueueName: !Sub PlaceOrderQueue-${Environment}

Then we create our DynamoDB table:

  DdbTable:
    Type: AWS::DynamoDB::Table
    DeletionPolicy: Delete
    Properties:
      TableName: !Sub orders-${Environment}
      AttributeDefinitions:
        - AttributeName: customer_id
          AttributeType: S
        - AttributeName: order_id
          AttributeType: S
      KeySchema:
        - AttributeName: customer_id
          KeyType: HASH
        - AttributeName: order_id
          KeyType: RANGE
      BillingMode: PAY_PER_REQUEST
      ProvisionedThroughput:
        ReadCapacityUnits: 0
        WriteCapacityUnits: 0

And now our state machine. Notice how the DefinitionSubstitutions property is where we define the ARNs that replace the variables in our state machine definition file; also notice that we must give the state machine permission to invoke every function.

  PlaceOrderStateMachine:
    Type: AWS::Serverless::StateMachine
    Properties:
      Type: STANDARD
      Name: !Sub place-order-${Environment}
      DefinitionUri: statemachine/state.asl.json
      Logging:
        Destinations:
          - CloudWatchLogsLogGroup:
              LogGroupArn: !GetAtt Logs.Arn
        IncludeExecutionData: true
        Level: ALL
      DefinitionSubstitutions:
        CheckAvailabilityArn: !GetAtt CheckAvailability.Arn
        PlaceOrderArn: !GetAtt PlaceOrder.Arn
        NotifyCustomerArn: !GetAtt NotifyCustomer.Arn
      Policies:
        - LambdaInvokePolicy:
            FunctionName: !Ref CheckAvailability
        - LambdaInvokePolicy:
            FunctionName: !Ref PlaceOrder
        - LambdaInvokePolicy:
            FunctionName: !Ref NotifyCustomer
        - CloudWatchLogsFullAccess

Now the functions. For check availability there's nothing special, just a simple Lambda function:

  CheckAvailability:
    Type: AWS::Serverless::Function
    Properties:
      CodeUri: functions/CheckAvailability
      Handler: checkAvailability.handler
      Runtime: nodejs12.x
      FunctionName: !Sub PlaceOrder-CheckAvailability-${Environment}

Then the place order function. In this case we need two additional things: first, pass the DynamoDB table name as an environment variable by referencing the DynamoDB table that we're going to create in the same template, and second, give the function the necessary permissions to perform a put operation on that table.

  PlaceOrder:
    Type: AWS::Serverless::Function
    Properties:
      CodeUri: functions/PlaceOrder
      Handler: placeOrder.handler
      Runtime: nodejs12.x
      FunctionName: !Sub PlaceOrder-PlaceOrder-${Environment}
      Environment:
        Variables:
          DDB_TABLE: !Ref DdbTable
      Policies:
        - Statement:
            - Sid: DynamoDBPolicy
              Effect: Allow
              Action:
                - dynamodb:PutItem
              Resource:
                - !GetAtt DdbTable.Arn

Notify customer is also a pretty simple one:

  NotifyCustomer:
    Type: AWS::Serverless::Function
    Properties:
      CodeUri: functions/NotifyCustomer
      Handler: notifyCustomer.handler
      Runtime: nodejs12.x
      FunctionName: !Sub PlaceOrder-NotifyCustomer-${Environment}

And finally, the trigger state machine function. For this one we need to specify the ARN of the state machine as an environment variable by referencing the state machine that we're going to create in the same template, give the function the necessary permissions to start an execution of the state machine, and finally create an event trigger linked to the SQS queue that we're also going to create in the same template.

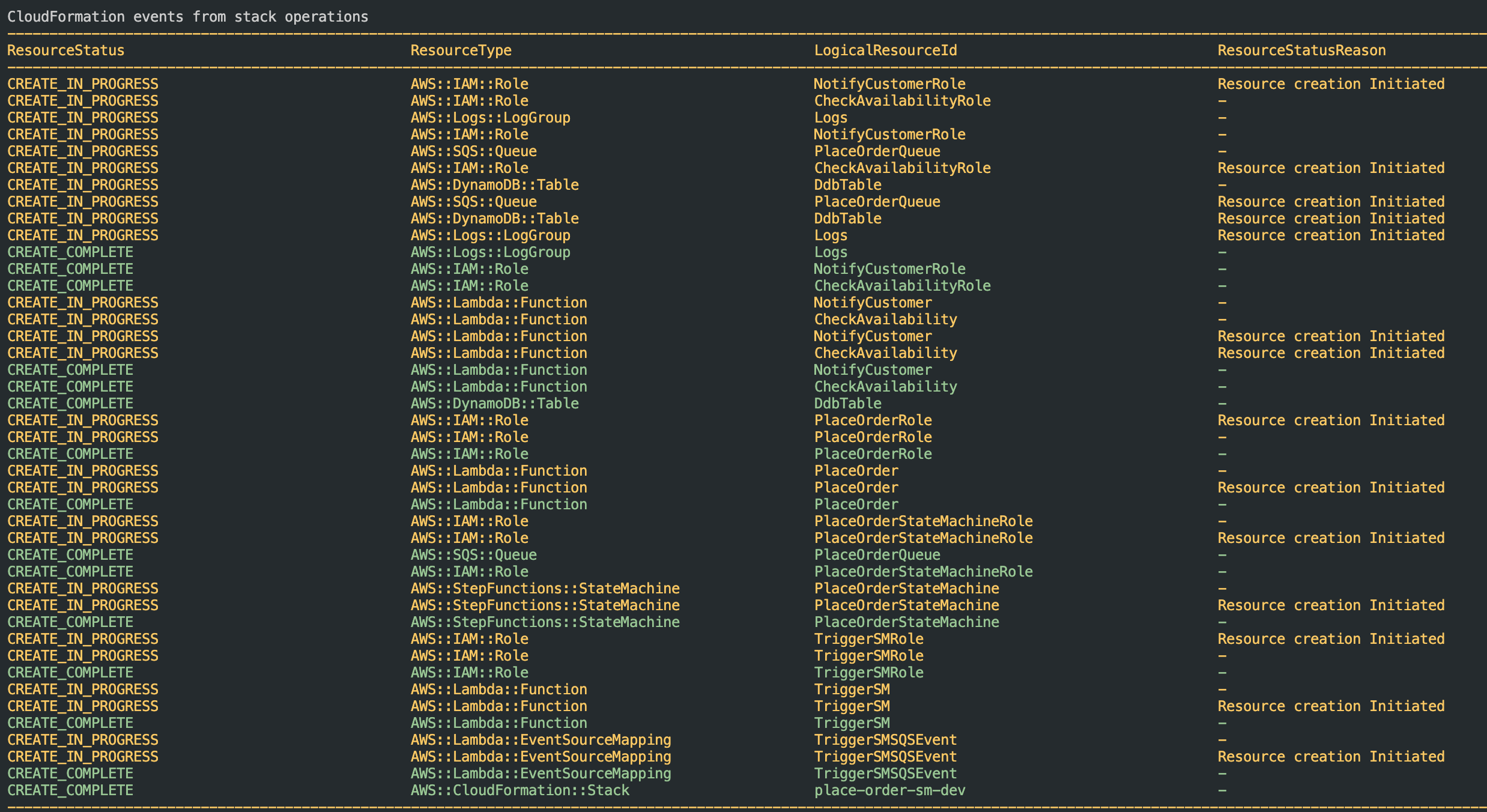

Step 4: Deploy & Test

In order to deploy this template we need 3 commands:

sam build
sam package --s3-bucket <YOUR_S3_BUCKET> --output-template-file packaged.yaml
sam deploy --template-file packaged.yaml --stack-name place-order-sm-dev --capabilities CAPABILITY_IAM --parameter-overrides Environment=dev

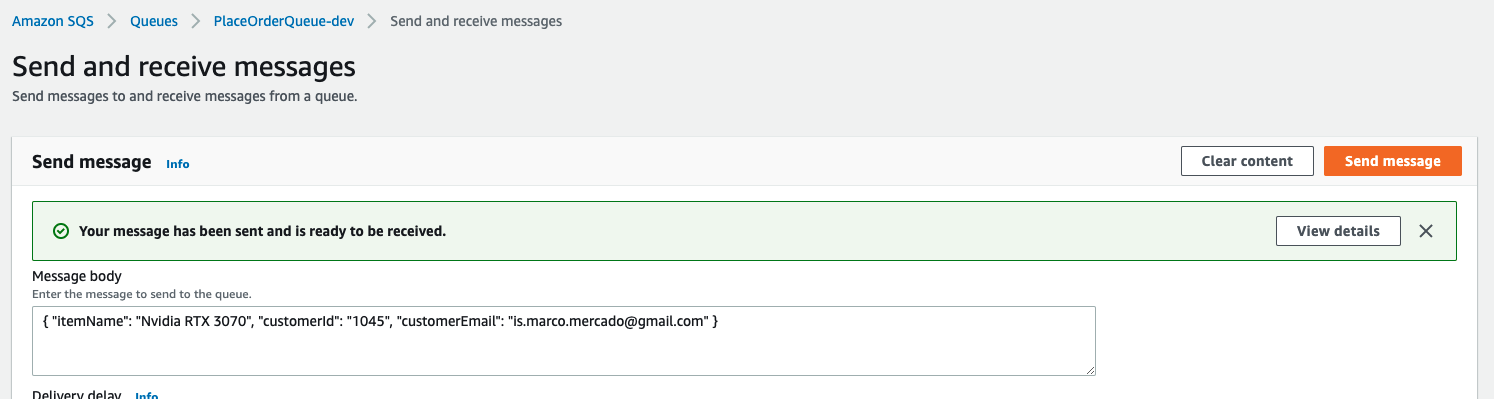

Now you can send a message over SQS and see how your state machine handles the request. I'll leave you a screenshot of the message that I sent from the AWS Console, but feel free to use the AWS SDK or the CLI; whichever you choose, the result should be the same.

{ "itemName": "Nvidia RTX 3070", "customerId": "1045", "customerEmail": "is.marco.mercado@gmail.com" }
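If you prefer the SDK over the console, a minimal sketch could look like this. It assumes the aws-sdk v2 style client used in the rest of the post; `sendOrder` is a hypothetical helper, the queue URL and email are placeholders, and a fake client is used so the snippet runs without AWS credentials.

```javascript
// `sqsClient` is expected to look like an aws-sdk v2 `AWS.SQS` instance,
// i.e. `sendMessage(params).promise()` resolves with the send result.
function sendOrder(sqsClient, queueUrl, order) {
  const params = { QueueUrl: queueUrl, MessageBody: JSON.stringify(order) }
  return sqsClient.sendMessage(params).promise()
}

// Fake client used only so this sketch runs without AWS credentials.
const sentParams = []
const fakeSqs = {
  sendMessage: (params) => {
    sentParams.push(params)
    return { promise: () => Promise.resolve({ MessageId: 'fake-message-id' }) }
  }
}

const order = { itemName: 'Nvidia RTX 3070', customerId: '1045', customerEmail: 'customer@example.com' }

// Placeholder URL; use the URL of your PlaceOrderQueue-<Environment> queue.
const queueUrl = 'https://sqs.us-east-1.amazonaws.com/123456789012/PlaceOrderQueue-dev'

sendOrder(fakeSqs, queueUrl, order).then((result) => console.log(result.MessageId))

// With real credentials (assuming the aws-sdk v2 package is installed):
//   const AWS = require('aws-sdk')
//   sendOrder(new AWS.SQS({ region: 'us-east-1' }), queueUrl, order)
```

The message body has to be a JSON string, because the check availability function parses it with JSON.parse.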

Last but not least, as I mentioned before, if you're interested in stepping up this example into a real-world solution, I recommend taking into account that you'll probably need your Lambda functions to run inside a VPC with VPC endpoints. You can also check my post about the CI/CD pipeline for AWS SAM in order to automate the deployments.

Thank you for reading!

GitHub Repo