AWS Lambda - CI/CD with AWS SAM, CodePipeline & CloudFormation

A complete example of how to implement a CI/CD workflow from an infrastructure-as-code template

Introduction

In this guide, we're going to deploy an AWS Lambda function using CodePipeline and AWS SAM to continuously integrate and deploy our code changes to the cloud. Instead of using the AWS Management Console, we're going to define the pipeline in a CloudFormation template. That way you can easily provision the infrastructure for every development environment and reuse it in any AWS SAM based project.

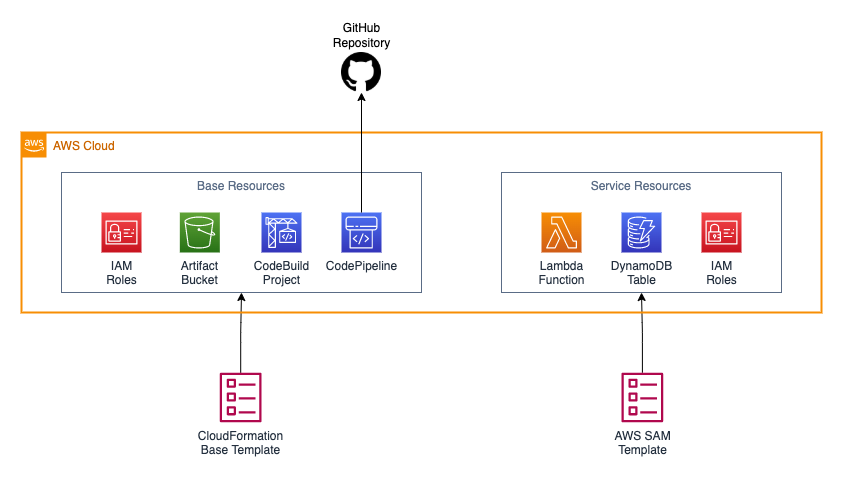

Architecture

As the diagram above shows, we're going to write two templates. The first is a CloudFormation template responsible for the base infrastructure, which in this case is mainly the pipeline. The second is an AWS SAM template that deploys our service infrastructure.

Tutorial

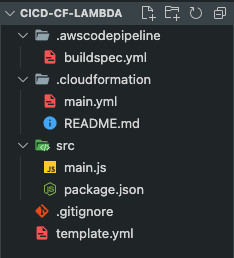

First, let's create a few files and directories so that we're all working from the same layout:

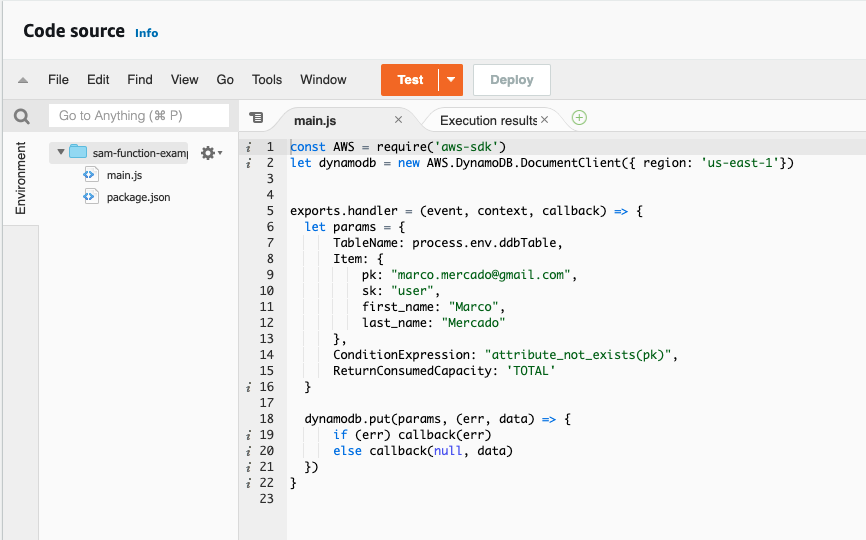

We're going to start by writing our Lambda function code in the main.js file under the src/ folder:

    const AWS = require('aws-sdk')

    let dynamodb = new AWS.DynamoDB.DocumentClient({ region: 'us-east-1' })

    exports.handler = (event, context, callback) => {
      let params = {
        // The table name is injected by the SAM template as an environment variable
        TableName: process.env.ddbTable,
        Item: {
          pk: "marco.mercado@gmail.com",
          sk: "user",
          first_name: "Marco",
          last_name: "Mercado"
        },
        // Only write the item if it does not exist yet
        ConditionExpression: "attribute_not_exists(pk)",
        ReturnConsumedCapacity: 'TOTAL'
      }

      dynamodb.put(params, (err, data) => {
        if (err) callback(err)
        else callback(null, data)
      })
    }

The function is pretty simple: as we've seen in the DynamoDB Operations post, we're performing a put operation on a DynamoDB table with the AWS SDK for Node.js. The only thing I want you to notice is that TableName reads the table name from an environment variable (process.env.ddbTable), which is going to be defined in the SAM template.
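If you'd like to exercise this logic without touching AWS, one option is to make the DynamoDB client injectable. The sketch below is not part of the original project: `buildPutParams`, `makeHandler` and `makeFakeClient` are hypothetical names, and the fake client only imitates the conditional put by rejecting an item whose pk and sk already exist, using the error code DynamoDB reports for a failed condition.

```javascript
// Hypothetical helper: builds the same put parameters as the handler in main.js.
function buildPutParams(tableName, user) {
  return {
    TableName: tableName,
    Item: {
      pk: user.email,
      sk: 'user',
      first_name: user.firstName,
      last_name: user.lastName
    },
    ConditionExpression: 'attribute_not_exists(pk)',
    ReturnConsumedCapacity: 'TOTAL'
  }
}

// Hypothetical factory: returns a Lambda-style handler bound to any client
// that exposes put(params, callback), such as AWS.DynamoDB.DocumentClient.
function makeHandler(client, tableName) {
  return (event, context, callback) => {
    client.put(buildPutParams(tableName, event), (err, data) => {
      if (err) callback(err)
      else callback(null, data)
    })
  }
}

// In-memory stand-in for the DocumentClient (an assumption for local testing only).
function makeFakeClient() {
  const items = new Map()
  return {
    items,
    put(params, cb) {
      const key = `${params.Item.pk}#${params.Item.sk}`
      if (items.has(key)) {
        const err = new Error('The conditional request failed')
        err.code = 'ConditionalCheckFailedException'
        return cb(err)
      }
      items.set(key, params.Item)
      cb(null, {})
    }
  }
}

// Usage:
// const handler = makeHandler(makeFakeClient(), 'my-table')
// handler({ email: 'a@b.c', firstName: 'A', lastName: 'B' }, {}, console.log)
```

In the deployed function you would pass the real DocumentClient and process.env.ddbTable instead of the fake client and a literal table name.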

NOTE: The package.json file in this example is the default one; you can create it by running npm init -y

Now let's create the template.yml file in which we're going to use AWS SAM to deploy our serverless resources:

    AWSTemplateFormatVersion: "2010-09-09"
    Transform: AWS::Serverless-2016-10-31
    Description: >
      AWS SAM Lambda function example

    Parameters:
      Environment:
        Type: String
        Description: Environment name

    Globals:
      Function:
        Timeout: 3

    Resources:
      DdbTable:
        Type: AWS::DynamoDB::Table
        DeletionPolicy: Retain
        Properties:
          TableName: !Sub tutorial-dynamodb-cicd-${Environment}
          AttributeDefinitions:
            - AttributeName: pk
              AttributeType: S
            - AttributeName: sk
              AttributeType: S
          KeySchema:
            - AttributeName: pk
              KeyType: HASH
            - AttributeName: sk
              KeyType: RANGE
          # On-demand billing; ProvisionedThroughput is omitted because it
          # only applies to the PROVISIONED billing mode
          BillingMode: PAY_PER_REQUEST

      MainFunction:
        Type: AWS::Serverless::Function
        Properties:
          FunctionName: !Sub sam-function-example-${Environment}
          CodeUri: src/
          Handler: main.handler
          Runtime: nodejs12.x
          Environment:
            Variables:
              ddbTable: !Ref DdbTable
          Policies:
            - Statement:
                - Sid: DynamoDBPolicy
                  Effect: Allow
                  Action:
                    - dynamodb:PutItem
                  Resource:
                    - !GetAtt DdbTable.Arn

    Outputs:
      MainFunction:
        Description: "Main Lambda Function ARN"
        Value: !GetAtt MainFunction.Arn

The template deploys a DynamoDB table, with the environment as a suffix so that resources are split by environment, and a Lambda function with an IAM role that allows it to write to that table.
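To make the per-environment split concrete, here is a small sketch of the names that the `!Sub` expressions in this template resolve to. The `resourceNames` helper is hypothetical and only mirrors the string substitution; CloudFormation does the real work at deploy time.

```javascript
// Hypothetical helper: mirrors the !Sub expressions used in template.yml.
function resourceNames(environment) {
  if (typeof environment !== 'string' || environment.length === 0) {
    throw new TypeError('environment must be a non-empty string')
  }
  const tableName = `tutorial-dynamodb-cicd-${environment}`
  return {
    tableName,
    functionName: `sam-function-example-${environment}`,
    // !Ref on a DynamoDB table returns its name, so the Lambda
    // environment variable ddbTable holds the same value.
    ddbTableEnvVar: tableName
  }
}

// Usage:
// console.log(resourceNames('staging'))
```

Deploying the same template with a different Environment value gives you a second table and function that do not collide with the first.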

Nice! At this point we could deploy our function with the AWS SAM CLI, but that would be a manual step. What we want is for every push to the repository to be built and deployed automatically.

AWS SAM is going to run inside CodeBuild, so we need to write step-by-step instructions for building and deploying our project with AWS SAM. Those instructions go in the buildspec.yml file under the .awscodepipeline folder:

    version: 0.2

    phases:
      build:
        commands:
          - sam build -t template.yml
      post_build:
        commands:
          - |
            sam deploy --no-confirm-changeset --no-fail-on-empty-changeset \
              --s3-bucket $CODEPIPELINE_BUCKET \
              --s3-prefix $STACK_NAME \
              --region $AWS_REGION \
              --stack-name $STACK_NAME \
              --capabilities CAPABILITY_IAM \
              --parameter-overrides \
                Environment=$ENVIRONMENT

Now we have all the components we need to set up our automation pipeline. The last step is to write our CloudFormation template, located in the main.yml file under the .cloudformation directory. This is the longest part of the example, so I'm going to break it into steps; if you want to see the entire file, visit the GitHub repository linked at the end of this post.

First, as with every CloudFormation template, we define the version and write a brief description of the template:

    AWSTemplateFormatVersion: '2010-09-09'
    Description: 'CloudFormation template to create an automation pipeline for AWS SAM projects'

Next, we define our parameters:

    Parameters:
      FunctionName:
        Type: String
        Description: Name of the function to deploy with the pipeline
      Environment:
        Type: String
        Description: Environment name
      GitHubRepo:
        Type: String
        Description: GitHub repository name
      GitHubUser:
        Type: String
        Description: GitHub user name
      GitHubOAuthToken:
        Type: String
        NoEcho: true  # masks the token in console and API output
        Description: GitHub auth token

    Resources:

Now we're going to declare the resources this template creates. First of all, we define the IAM roles that CodePipeline and CodeBuild are going to assume in order to have the necessary permissions:

NOTE: From this point on, every block of code is indented under Resources

For CodeBuild:

      CodeBuildServiceRole:
        Type: AWS::IAM::Role
        Properties:
          Path: /
          AssumeRolePolicyDocument:
            Version: "2012-10-17"
            Statement:
              - Effect: Allow
                Principal:
                  Service: codebuild.amazonaws.com
                Action: sts:AssumeRole
          Policies:
            - PolicyName: "SAM"
              PolicyDocument:
                Version: "2012-10-17"
                Statement:
                  - Effect: Allow
                    Action:
                      - dynamodb:DescribeTable
                      - dynamodb:CreateTable
                      - dynamodb:DeleteTable
                    Resource: '*'
                  - Effect: Allow
                    Action:
                      - cloudformation:CreateChangeSet
                      - cloudformation:DescribeChangeSet
                      - cloudformation:ExecuteChangeSet
                      - cloudformation:DescribeStackEvents
                      - cloudformation:DescribeStacks
                      - cloudformation:GetTemplateSummary
                      - cloudformation:UpdateStack
                    Resource: '*'
                  - Effect: Allow
                    Action:
                      - lambda:AddPermission
                      - lambda:CreateFunction
                      - lambda:DeleteFunction
                      - lambda:GetFunction
                      - lambda:GetFunctionConfiguration
                      - lambda:ListTags
                      - lambda:RemovePermission
                      - lambda:TagResource
                      - lambda:UntagResource
                      - lambda:UpdateFunctionCode
                      - lambda:UpdateFunctionConfiguration
                    Resource: arn:aws:lambda:*:*:function:*
                  - Effect: Allow
                    Action:
                      - iam:PassRole
                    Resource: "*"
                    Condition:
                      StringEquals:
                        iam:PassedToService: lambda.amazonaws.com
                  - Effect: Allow
                    Action:
                      - iam:CreateRole
                      - iam:DetachRolePolicy
                      - iam:PutRolePolicy
                      - iam:AttachRolePolicy
                      - iam:DeleteRole
                      - iam:GetRole
                    Resource: "arn:aws:iam::*:role/*"
            - PolicyName: "logs"
              PolicyDocument:
                Version: "2012-10-17"
                Statement:
                  - Effect: Allow
                    Action:
                      - logs:CreateLogGroup
                      - logs:CreateLogStream
                      - logs:PutLogEvents
                      - ssm:GetParameters
                    Resource: '*'
            - PolicyName: "S3"
              PolicyDocument:
                Version: "2012-10-17"
                Statement:
                  - Effect: Allow
                    Action:
                      - s3:GetObject
                      - s3:PutObject
                      - s3:GetObjectVersion
                    Resource: !Sub arn:aws:s3:::${ArtifactBucket}/*

For CodePipeline:

      CodePipelineServiceRole:
        Type: AWS::IAM::Role
        Properties:
          Path: /
          AssumeRolePolicyDocument:
            Version: 2012-10-17
            Statement:
              - Effect: Allow
                Principal:
                  Service: codepipeline.amazonaws.com
                Action: sts:AssumeRole
          Policies:
            - PolicyName: root
              PolicyDocument:
                Version: 2012-10-17
                Statement:
                  - Resource:
                      - !Sub arn:aws:s3:::${ArtifactBucket}/*
                      - !Sub arn:aws:s3:::${ArtifactBucket}
                    Effect: Allow
                    Action:
                      - s3:*
                  - Resource: "*"
                    Effect: Allow
                    Action:
                      - codebuild:StartBuild
                      - codebuild:BatchGetBuilds
                      - iam:PassRole
                  - Resource: "*"
                    Effect: Allow
                    Action:
                      - codecommit:CancelUploadArchive
                      - codecommit:GetBranch
                      - codecommit:GetCommit
                      - codecommit:GetUploadArchiveStatus
                      - codecommit:UploadArchive

Next, we're going to create an S3 bucket to store our artifacts:

      ArtifactBucket:
        Type: AWS::S3::Bucket
        DeletionPolicy: Delete

Now we need a CodeBuild project. Remember that CodeBuild is a compute instance managed by AWS that performs a list of steps to build your project, so we need to configure that instance:

      CodeBuildProject:
        Type: AWS::CodeBuild::Project
        Properties:
          Artifacts:
            Type: CODEPIPELINE
          Source:
            Type: CODEPIPELINE
            BuildSpec: .awscodepipeline/buildspec.yml
          Environment:
            ComputeType: BUILD_GENERAL1_SMALL
            Image: aws/codebuild/amazonlinux2-x86_64-standard:2.0
            Type: LINUX_CONTAINER
            EnvironmentVariables:
              - Name: CODEPIPELINE_BUCKET
                Type: PLAINTEXT
                Value: !Ref ArtifactBucket
              - Name: STACK_NAME
                Type: PLAINTEXT
                Value: !Sub ${FunctionName}-${Environment}
              - Name: ENVIRONMENT
                Type: PLAINTEXT
                Value: !Ref Environment
          Name: !Ref AWS::StackName
          ServiceRole: !Ref CodeBuildServiceRole

Finally, we put everything together in a CodePipeline pipeline. Remember that CodePipeline is an orchestration service; in this example it connects the GitHub repository that holds our code with the CodeBuild project that deploys our serverless resources.

Pipeline:

Type: AWS::CodePipeline::Pipeline

Properties:

Name: !Sub ${FunctionName}-${Environment}

RoleArn: !GetAtt CodePipelineServiceRole.Arn

ArtifactStore:

Type: S3

Location: !Ref ArtifactBucket

Stages:

- Name: Source

Actions:

- Name: Function

ActionTypeId:

Category: Source

Owner: ThirdParty

Version: '1'

Provider: GitHub

Configuration:

Owner: !Ref GitHubUser

Repo: !Ref GitHubRepo

Branch: !Ref Environment

OAuthToken: !Ref GitHubOAuthToken

RunOrder: 1

OutputArtifacts:

- Name: SourceArtifact

- Name: Build

Actions:

- Name: Build

ActionTypeId:

Category: Build

Owner: AWS

Version: '1'

Provider: CodeBuild

Configuration:

ProjectName: !Ref CodeBuildProject

InputArtifacts:

- Name: SourceArtifact

OutputArtifacts:

- Name: BuildArtifact

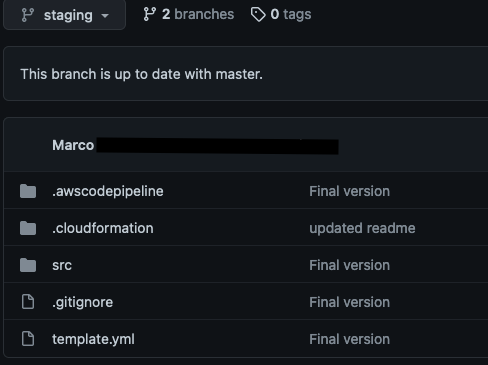

RunOrder: 2Before you deploy the project be sure that you have your code updated in a GitHub repository in a branch that matches the name of the environment that you're going to pick (ie. staging).
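Because the pipeline's source action uses the Environment parameter as the branch name, it can help to assemble the stack parameters in one place before deploying. The following is only a sketch: `buildStackParameters` and `sourceBranch` are hypothetical helpers, and the values passed to them are placeholders. They produce the ParameterKey/ParameterValue pairs for the five parameters declared in main.yml, in the shorthand form the AWS CLI accepts.

```javascript
// The five parameters declared in .cloudformation/main.yml.
const PARAMETER_KEYS = [
  'FunctionName',
  'Environment',
  'GitHubUser',
  'GitHubRepo',
  'GitHubOAuthToken'
]

// Hypothetical helper: returns one "ParameterKey=...,ParameterValue=..." string
// per template parameter, failing early if a value is missing.
function buildStackParameters(values) {
  return PARAMETER_KEYS.map((key) => {
    const value = values[key]
    if (typeof value !== 'string' || value.length === 0) {
      throw new Error(`Missing value for parameter ${key}`)
    }
    return `ParameterKey=${key},ParameterValue=${value}`
  })
}

// Hypothetical helper: the branch the pipeline will watch is the Environment value.
function sourceBranch(values) {
  return values.Environment
}

// Usage (placeholder values):
// buildStackParameters({
//   FunctionName: 'my-function',
//   Environment: 'staging',
//   GitHubUser: 'my-user',
//   GitHubRepo: 'my-repo',
//   GitHubOAuthToken: process.env.GITHUB_TOKEN
// }).join(' ')
```

Reading the token from an environment variable, as in the usage comment, keeps it out of your shell history; that variable name is an assumption, not something the project defines.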

Now we can deploy our project with the following command using the AWS CLI:

NOTE: At this point you need to have the AWS CLI installed and your GitHub OAuth access token at hand. If you don't know how to generate your token or how to install the AWS CLI, I'll leave you a couple of useful links.

Generate GitHub OAuth Access Token

    aws cloudformation create-stack \
      --stack-name lambda-cicd-post \
      --template-body file://.cloudformation/main.yml \
      --parameters \
        ParameterKey=FunctionName,ParameterValue=<LAMBDA_FUNCTION_NAME> \
        ParameterKey=Environment,ParameterValue=<ENVIRONMENT> \
        ParameterKey=GitHubUser,ParameterValue=<GITHUB_USER> \
        ParameterKey=GitHubRepo,ParameterValue=<REPO_NAME> \
        ParameterKey=GitHubOAuthToken,ParameterValue=<GITHUB_OAUTH_TOKEN> \
      --region us-east-1 \
      --capabilities CAPABILITY_IAM

The following things should happen:

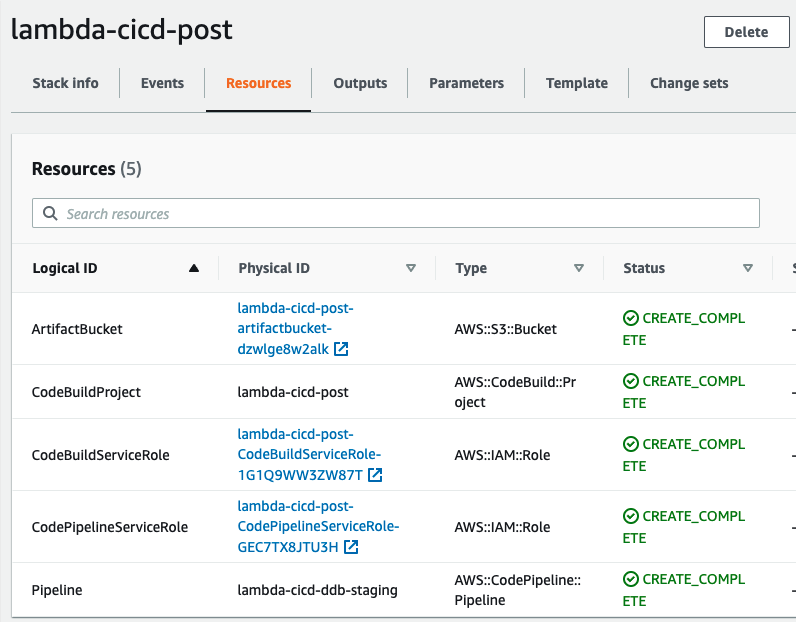

The stack should finish successfully in CloudFormation:

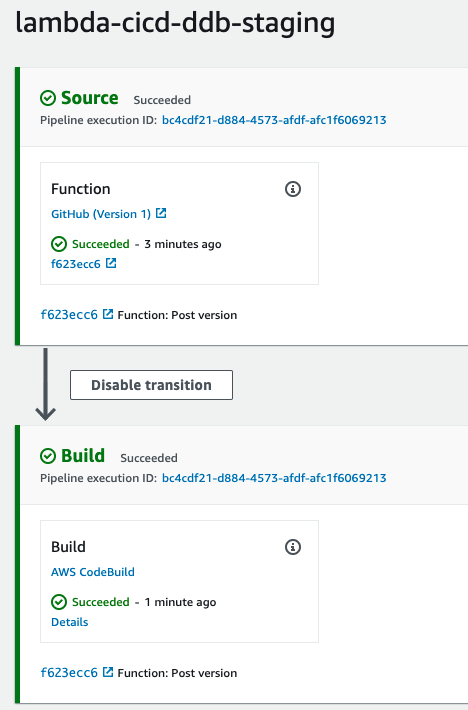

It should create a CodePipeline pipeline that immediately triggers its first run:

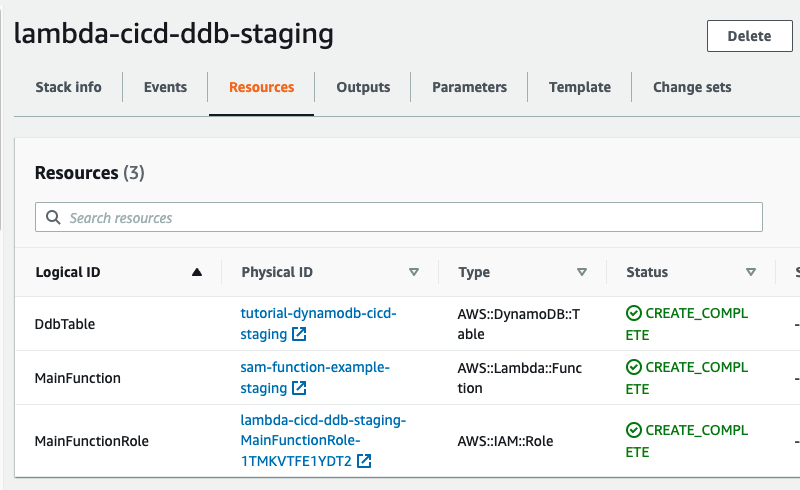

That first run should create the following stack:

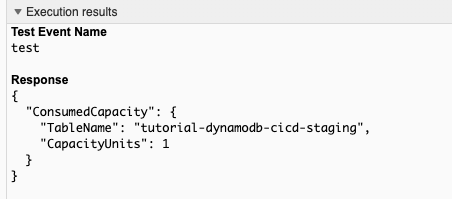

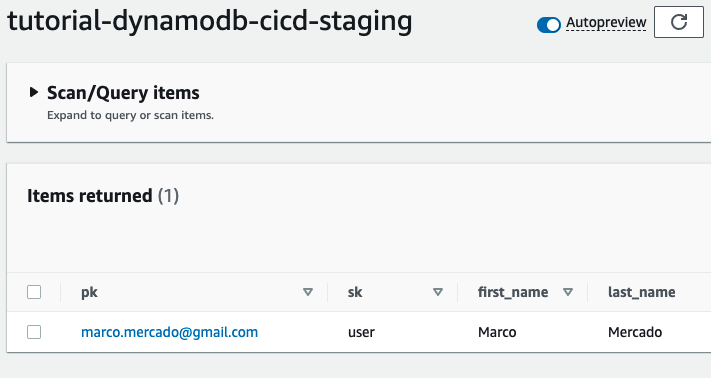

And now you should be able to test your function:
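One way to do that from Node.js is sketched below, under a few assumptions: the function name follows the FunctionName pattern from the SAM template, the handler ignores its event so an empty payload is enough, and `lambdaClient` is an `AWS.Lambda` instance from the same aws-sdk package used in main.js. `buildInvokeParams` and `invokeDeployedFunction` are hypothetical helper names.

```javascript
// Hypothetical helper: parameters for a synchronous invocation of the deployed function.
function buildInvokeParams(environment) {
  return {
    FunctionName: `sam-function-example-${environment}`,
    InvocationType: 'RequestResponse',
    Payload: JSON.stringify({})
  }
}

// Hypothetical helper: invokes the function and returns the parts of the
// response that tell you whether the put succeeded.
async function invokeDeployedFunction(lambdaClient, environment) {
  const res = await lambdaClient.invoke(buildInvokeParams(environment)).promise()
  return {
    statusCode: res.StatusCode,
    functionError: res.FunctionError || null,
    payload: res.Payload ? JSON.parse(res.Payload.toString()) : null
  }
}

// Usage (requires AWS credentials and the deployed stack):
// const AWS = require('aws-sdk')
// const lambda = new AWS.Lambda({ region: 'us-east-1' })
// invokeDeployedFunction(lambda, 'staging').then(console.log)
```

Keep in mind that the ConditionExpression in main.js rejects an item that already exists, so a second invocation should come back with functionError set rather than a successful write.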

And that's it for this tutorial. Remember that the entire code is available here. See you next time!